ElastiCache

Introduction

- Fully managed Redis or Memcached instances

- Caches are in-memory databases with high performance and low latency

- Helps reduce load on databases for read-intensive workloads

- Helps make your application stateless (e.g., by keeping session state in the cache)

- Requires that your application be architected with a cache in mind

Redis

- Supports Multi-AZ with automatic failover

- Supports read replicas to scale out reads

- Backup and restore features

- Supports sets and sorted sets

Memcached

- Multi-node for partitioning of data (sharding)

- None of the Redis features above: no replication or high availability, no persistence, no backup and restore
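Memcached does not replicate data between nodes; the client decides which node holds each key. A minimal sketch of that idea, assuming simple modulo hashing over a hypothetical list of node endpoints (`NODES` and `node_for_key` are illustrative names, not an ElastiCache or Memcached API):

```python
import hashlib

# Hypothetical node endpoints; substitute the endpoints of your own cluster.
NODES = ["node-a:11211", "node-b:11211", "node-c:11211"]


def node_for_key(key: str, nodes: list[str] = NODES) -> str:
    """Pick the node that owns `key` by hashing it modulo the node count."""
    # Use a stable hash: Python's built-in hash() is randomized per process,
    # so different clients would disagree about where a key lives.
    digest = hashlib.md5(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


if __name__ == "__main__":
    for key in ["user:1", "user:2", "session:abc"]:
        print(key, "->", node_for_key(key))
```

With modulo hashing, adding or removing a node remaps most keys, which shows up as a burst of cache misses; production clients commonly use consistent hashing to limit that churn.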

Caching Implementation Considerations

- Is it safe to cache the data?

  - Data may be out of date (eventually consistent)

- Is caching effective for that data?

  - Patterns: data changing slowly, few keys are frequently needed

  - Anti-patterns: data changing rapidly, a large key space that is all frequently needed

- Is the data structured well for caching?

  - Example: key-value caching, or caching of aggregation results

Caching design patterns

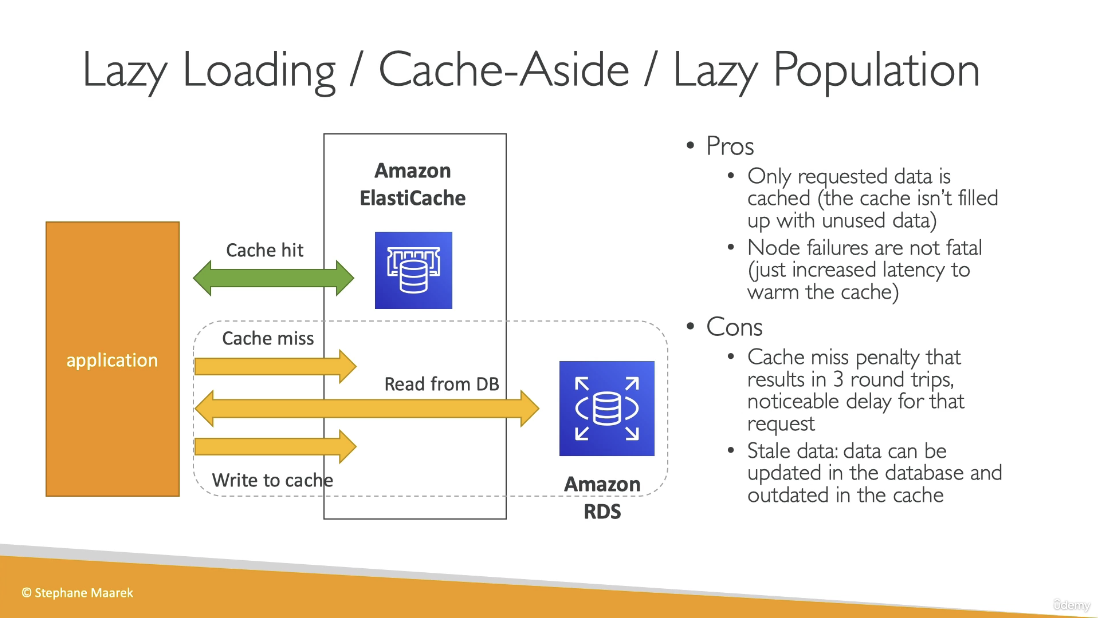

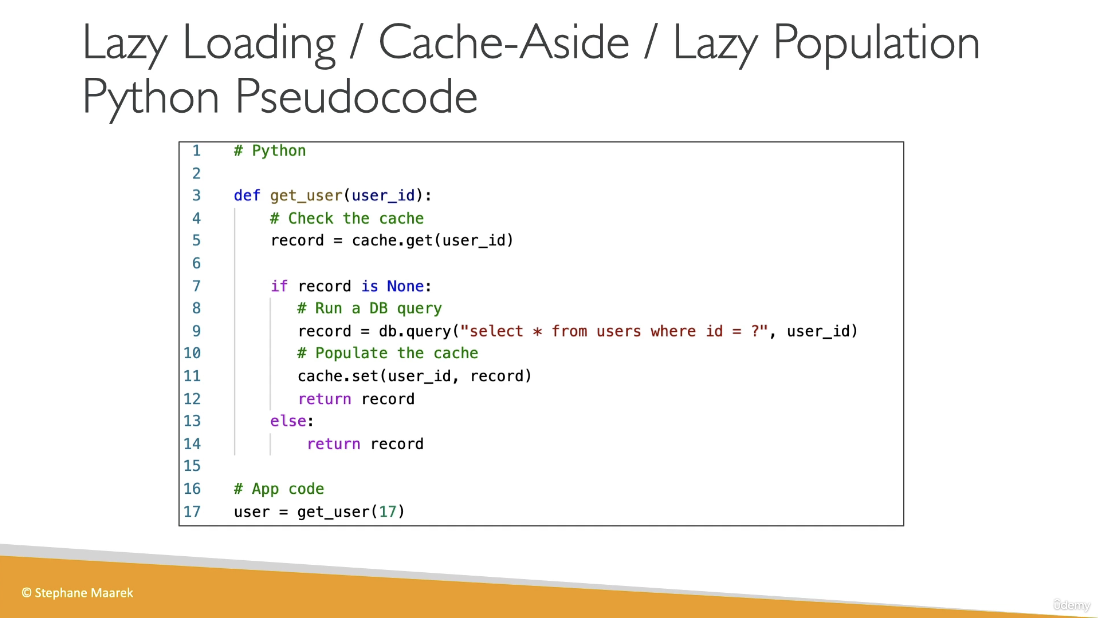

Lazy Loading / Cache-Aside / Lazy Population

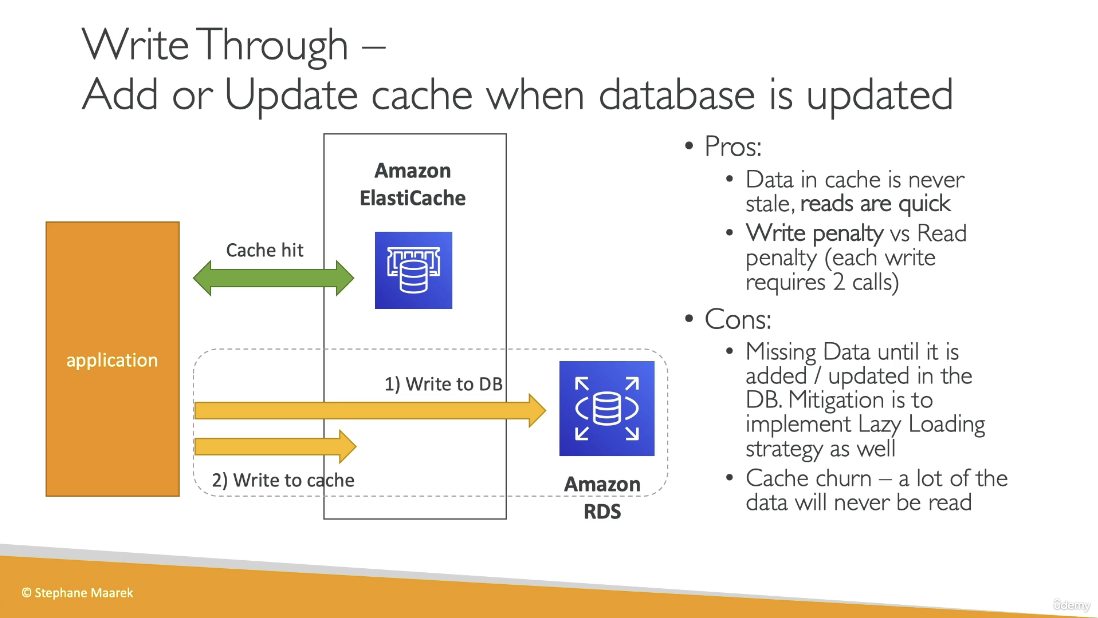

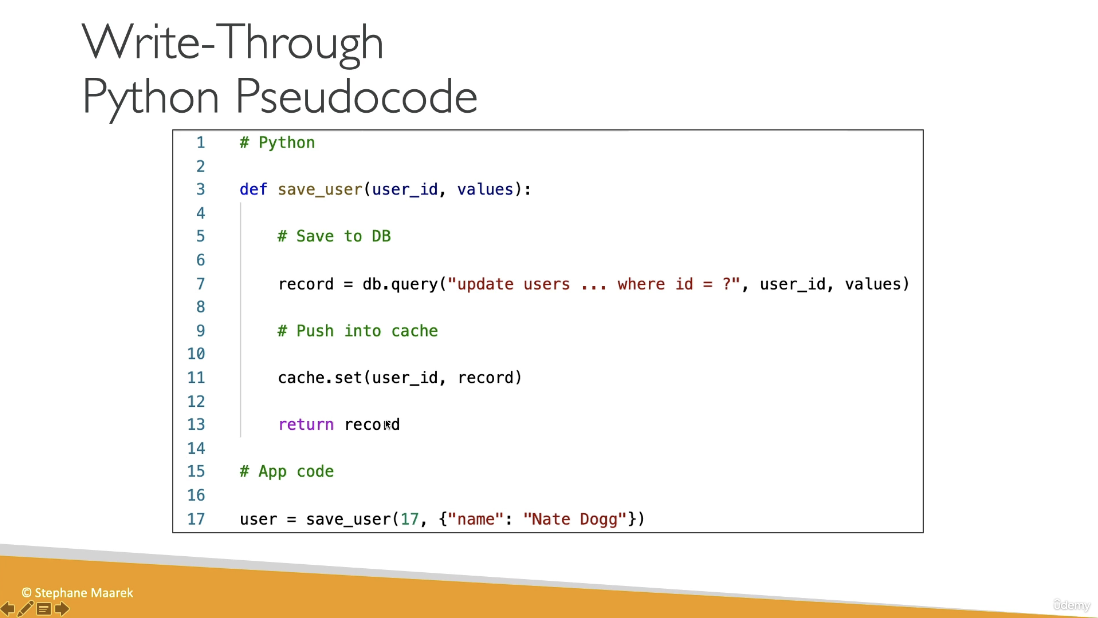

Write-through
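With write-through, the application updates the cache whenever it writes to the database, so reads of recently written data are never stale. A minimal sketch, again assuming dicts stand in for both stores and using hypothetical `write_user`/`get_user` helpers:

```python
from typing import Optional

# Plain dicts stand in for the database (e.g. RDS) and the cache (e.g. ElastiCache).
database: dict[str, str] = {}
cache: dict[str, str] = {}


def write_user(key: str, value: str) -> None:
    """Write-through: every database write also updates the cache."""
    database[key] = value  # write to the source of truth first
    cache[key] = value     # then keep the cache in sync


def get_user(key: str) -> Optional[str]:
    """Read from the cache; fall back to the database (without caching) on a miss."""
    value = cache.get(key)
    if value is None:      # e.g. data that existed before the cache was added
        value = database.get(key)
    return value
```

The trade-off is a write penalty (two writes per update) and possible cache churn, since data is cached whether or not it is ever read. Items that were never written through, such as data that predates the cache, stay missing from the cache, which is why write-through is often combined with lazy loading.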

Cache Evictions and TTL

- Cache eviction can occur in 3 ways:

  - You explicitly delete the item from the cache

  - The item is evicted because memory is full and it has not been used recently (LRU)

  - The TTL (time to live) has expired

- TTL can range from a few seconds to days
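ElastiCache applies these rules on the server (for Redis, per-key TTLs plus the `maxmemory-policy` eviction setting), so application code does not implement them. Purely to illustrate the three eviction paths, here is a toy in-process cache; `TTLLRUCache` is a hypothetical class, and its `max_items` limit is an item count standing in for memory size:

```python
import time
from collections import OrderedDict
from typing import Callable, Optional


class TTLLRUCache:
    """Toy cache showing explicit delete, LRU eviction, and TTL expiry."""

    def __init__(self, max_items: int, clock: Callable[[], float] = time.monotonic):
        self.max_items = max_items  # hypothetical stand-in for "memory is full"
        self.clock = clock          # injectable so expiry can be tested without sleeping
        self._data: "OrderedDict[str, tuple[str, float]]" = OrderedDict()

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._data[key] = (value, self.clock() + ttl_seconds)
        self._data.move_to_end(key)          # mark as most recently used
        while len(self._data) > self.max_items:
            self._data.popitem(last=False)   # evict the least recently used item

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:       # TTL expired: drop the item
            del self._data[key]
            return None
        self._data.move_to_end(key)          # a read also counts as "in use"
        return value

    def delete(self, key: str) -> None:
        self._data.pop(key, None)            # explicit deletion
```

The `OrderedDict` keeps keys in recency order, so the least recently used key is always at the front and can be popped in constant time.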